AI-Driven Polymer Science: Revolutionizing Material Discovery and Drug Delivery Systems



Abstract

This article explores the transformative integration of Artificial Intelligence (AI) into polymer science, specifically targeting researchers, scientists, and drug development professionals. We first establish the foundational synergy between AI algorithms and polymer informatics. Next, we detail methodological breakthroughs in AI-driven polymer design, synthesis, and formulation for targeted drug delivery. We then address critical challenges in model interpretability, data scarcity, and experimental validation, providing optimization strategies. Finally, we validate AI's impact by comparing its performance against traditional methods in predicting polymer properties and designing clinical-grade biomaterials. This comprehensive review synthesizes current advancements and outlines a roadmap for AI's future in creating next-generation polymeric therapeutics.

The AI-Polymer Synergy: Core Concepts and Data Foundations for Modern Research

Application Notes

The integration of Artificial Intelligence (AI) paradigms into polymer science is accelerating the discovery, design, and optimization of polymeric materials. These computational approaches are transforming traditional, often trial-and-error, methodologies into data-driven and predictive frameworks.

Machine Learning (ML) is extensively used for establishing quantitative structure-property relationships (QSPRs). It correlates molecular descriptors, topological indices, or processing parameters with key polymer properties such as glass transition temperature (Tg), tensile strength, or degradation rate. Support Vector Regression (SVR) and Random Forest (RF) are commonly employed for these predictive modeling tasks.

Deep Learning (DL), particularly Graph Neural Networks (GNNs), excels at directly learning from polymer representations (e.g., SMILES strings, molecular graphs) without requiring hand-crafted features. Convolutional Neural Networks (CNNs) are applied to spectral data (FTIR, NMR) for automated feature extraction and classification, such as identifying polymer blend composition or degradation state.

Generative Models represent a paradigm shift towards inverse design. Models like Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) learn the latent space of polymer structures and can generate novel, chemically feasible candidates targeting specific property profiles. This is crucial for designing new biodegradable polymers, drug delivery vehicles, or high-performance composites.

Table 1: Quantitative Performance Comparison of AI Models in Polymer Property Prediction

| AI Paradigm | Model Type | Example Polymer Property Predicted | Typical Dataset Size | Reported Error Metric (e.g., MAE, R²) | Key Advantage in Polymer Context |

|---|---|---|---|---|---|

| Machine Learning (ML) | Random Forest (RF) | Glass Transition Temp (Tg) | 500 - 10k data points | R²: 0.85 - 0.92 | Handles diverse descriptor types; interpretable feature importance. |

| Machine Learning (ML) | Support Vector Regression (SVR) | Melting Temperature (Tm) | 200 - 5k data points | MAE: 8 - 15 °C | Effective in high-dimensional spaces with small to medium datasets. |

| Deep Learning (DL) | Graph Neural Network (GNN) | Solubility Parameter | 1k - 50k polymers | R²: 0.88 - 0.95 | Learns directly from molecular graph; captures topological features. |

| Deep Learning (DL) | 1D-CNN | FTIR Spectrum to Polymer ID | 10k - 100k spectra | Accuracy: >98% | Automated feature extraction from complex spectral data. |

| Generative Model | Variational Autoencoder (VAE) | Generate novel monomer structures | 50k - 500k SMILES | Validity: >85% | Continuous latent space enables interpolation and targeted exploration. |

| Generative Model | Reinforcement Learning (RL) | Design polymers for target drug release profile | N/A (Trained via simulation) | Success Rate: ~70%* | Optimizes for multi-step, complex objectives (e.g., release kinetics). |

*Success rate defined as % of generated polymers meeting all target criteria in silico.

Table 2: AI Applications in Key Polymer Research Areas

| Research Area | Primary AI Paradigm | Specific Task | Impact & Outcome |

|---|---|---|---|

| Polymer Discovery | Generative Models | De novo design of polymer repeat units. | Expands chemical space beyond human intuition; accelerates discovery of polymers for organic electronics. |

| Drug Delivery Systems | ML & DL | Predicting drug loading efficiency & release kinetics from copolymer properties. | Reduces experimental batches needed to optimize nanoparticle formulations (e.g., PLGA, PLA). |

| Polymer Reaction Engineering | ML (Time-series models) | Predicting monomer conversion & molecular weight distribution in real-time. | Enables predictive control and optimization of polymerization reactors (e.g., ATRP, RAFT). |

| Polymer Characterization | DL (Computer Vision) | Analyzing microscopy images (SEM, TEM) for morphology (e.g., phase separation). | Provides quantitative, high-throughput analysis of blend morphology or nanoparticle dispersion. |

| Sustainable Polymers | ML & Generative Models | Predicting biodegradation rates or designing enzymatically cleavable linkages. | Guides synthesis of polymers with tailored environmental fate, reducing screening time. |

Experimental Protocols

Protocol 2.1: ML-Guided Prediction of Glass Transition Temperature (Tg)

Objective: To build a Random Forest model predicting Tg from monomer structure. Materials: See "The Scientist's Toolkit" below. Method:

- Data Curation: Assemble a dataset of known polymers and their experimental Tg values from sources like PoLyInfo or Polymer Genome. Clean data, ensuring consistent units.

- Descriptor Calculation: For each polymer repeat unit (represented as a SMILES string), use RDKit to compute molecular descriptors (e.g., number of rotatable bonds, polar surface area, topological indices like Wiener index). Include polymer-specific descriptors like chain flexibility parameter if available.

- Data Preparation: Split data into training (70%), validation (15%), and test (15%) sets. Fit the feature scaler (e.g., StandardScaler) on the training set only, then use it to transform the validation and test sets, so that no information from held-out data leaks into training.

- Model Training: Train a Random Forest Regressor (from scikit-learn) on the training set. Use the validation set and grid search/randomized search for hyperparameter optimization (e.g., `n_estimators`, `max_depth`).

- Evaluation: Predict Tg for the held-out test set. Calculate performance metrics: Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and Coefficient of Determination (R²).

- Deployment: Use the trained model to predict Tg for novel, unsynthesized polymer structures of interest.
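The steps of Protocol 2.1 can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the descriptor matrix and Tg values are synthetic stand-ins (real descriptors would come from RDKit), the coefficients used to generate them are arbitrary, and the small hyperparameter grid is chosen only for brevity. Feature scaling is left out because tree ensembles are insensitive to monotonic rescaling of individual features.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Synthetic stand-in for a descriptor table: 300 "polymers" x 4 descriptors.
# In practice these columns would come from RDKit descriptor calculations.
X = rng.normal(size=(300, 4))
# Hypothetical Tg (K) built from the descriptors plus measurement noise.
y = 350 + 40 * X[:, 0] - 25 * X[:, 1] + 10 * X[:, 2] * X[:, 3] + rng.normal(scale=5, size=300)

# 70 / 15 / 15 split: carve off 30 %, then halve it into validation and test.
X_train, X_tmp, y_train, y_tmp = train_test_split(X, y, test_size=0.30, random_state=0)
X_val, X_test, y_val, y_test = train_test_split(X_tmp, y_tmp, test_size=0.50, random_state=0)

# Small manual grid search, scored on the validation set.
best_model, best_mae = None, float("inf")
for n_estimators in (50, 200):
    for max_depth in (4, None):
        model = RandomForestRegressor(
            n_estimators=n_estimators, max_depth=max_depth, random_state=0
        )
        model.fit(X_train, y_train)
        mae = mean_absolute_error(y_val, model.predict(X_val))
        if mae < best_mae:
            best_model, best_mae = model, mae

# Final, one-time evaluation on the held-out test set.
pred = best_model.predict(X_test)
test_mae = mean_absolute_error(y_test, pred)
test_rmse = float(np.sqrt(mean_squared_error(y_test, pred)))
test_r2 = r2_score(y_test, pred)
print(f"MAE={test_mae:.1f} K  RMSE={test_rmse:.1f} K  R2={test_r2:.2f}")
```

The test set is touched only once, after model selection, so the reported metrics are not biased by the hyperparameter search.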

Protocol 2.2: Deep Learning for Polymer Spectral Classification

Objective: To train a 1D-CNN to classify polymer types from Fourier-Transform Infrared (FTIR) spectra. Method:

- Spectral Data Acquisition: Gather a large, labeled dataset of FTIR spectra (e.g., 4000-400 cm⁻¹) for various polymers (e.g., PS, PMMA, PET). Use databases or in-house measurements.

- Preprocessing: Interpolate all spectra to a common wavenumber axis. Perform baseline correction (e.g., using asymmetric least squares) and intensity normalization (e.g., vector (L2) normalization or Min-Max scaling to [0, 1]).

- Data Augmentation: Apply slight random shifts (± a few cm⁻¹), additive Gaussian noise, and random scaling to augment the training dataset and improve model robustness.

- Model Architecture: Construct a 1D-CNN using a framework like PyTorch or TensorFlow. Typical architecture: Input layer → 2-3 convolutional blocks (Conv1D + ReLU + BatchNorm + MaxPool1D) → Flatten layer → 2-3 Dense (fully connected) layers with Dropout → Softmax output layer.

- Training: Use categorical cross-entropy loss and the Adam optimizer. Train on the augmented training set, validating after each epoch on a separate validation set. Implement early stopping to prevent overfitting.

- Evaluation: Assess the final model on the unseen test set, reporting accuracy, precision, recall, and a confusion matrix. Deploy the model for automated, high-throughput spectral identification.
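The normalization and augmentation steps of Protocol 2.2 can be illustrated with NumPy alone. The sketch below assumes spectra already interpolated onto a common 1 cm⁻¹ grid; the function names, the toy single-band spectrum, and the augmentation magnitudes are illustrative choices rather than values taken from the protocol.

```python
import numpy as np

def minmax_scale(spectrum):
    """Scale one spectrum to the [0, 1] range."""
    lo, hi = spectrum.min(), spectrum.max()
    return (spectrum - lo) / (hi - lo)

def augment(spectrum, rng, max_shift=3, noise_sd=0.01, scale_range=0.05):
    """Return a randomly shifted, rescaled, and noised copy of a spectrum.

    max_shift is in grid points; on a 1 cm^-1 grid this is a few cm^-1.
    """
    shift = int(rng.integers(-max_shift, max_shift + 1))
    out = np.roll(spectrum, shift)
    # np.roll wraps around, so overwrite the wrapped points with the edge value.
    if shift > 0:
        out[:shift] = spectrum[0]
    elif shift < 0:
        out[shift:] = spectrum[-1]
    out = out * (1.0 + rng.uniform(-scale_range, scale_range))
    out = out + rng.normal(scale=noise_sd, size=out.shape)
    return out

rng = np.random.default_rng(42)
wavenumbers = np.arange(400, 4001, 1.0)  # common 1 cm^-1 axis
# Toy spectrum: one Gaussian band near 1730 cm^-1 on a flat offset.
raw = np.exp(-0.5 * ((wavenumbers - 1730) / 15) ** 2) + 0.1
clean = minmax_scale(raw)
augmented = np.stack([augment(clean, rng) for _ in range(8)])
print(augmented.shape)
```

Augmentation should be applied to the training split only, so that validation and test spectra remain representative of real measurements.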

Protocol 2.3: Generative Design of Drug Delivery Polymers

Objective: To use a Conditional Variational Autoencoder (CVAE) to generate novel polymer structures conditioned on desired drug release properties. Method:

- Dataset Construction: Create a paired dataset where each entry is a polymer SMILES string and its associated in vitro drug release profile parameters (e.g., time for 50% release (t50), release curve shape factor).

- Model Design: Implement a CVAE. The encoder takes the polymer SMILES (encoded as a one-hot tensor) and compresses it into a latent vector `z`. The condition (e.g., target t50) is concatenated to `z`. The decoder then reconstructs/generates a SMILES string from this conditioned latent vector.

- Training: Train the model to minimize the reconstruction loss (cross-entropy for SMILES) and the Kullback–Leibler divergence loss to ensure a well-structured latent space.

- Generation & Screening: To generate new candidates, sample a random latent vector `z` and concatenate it with a desired condition vector (e.g., `t50 = 24 hours`). Decode this to produce a novel polymer SMILES.

- Validation: Filter generated SMILES for chemical validity (using RDKit). Pass valid, novel structures through a pre-trained property predictor (a release-property model built along the lines of Protocol 2.1) to verify they meet the target release profile. Select top candidates for further in silico evaluation or for synthesis and in vitro testing.
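Two pieces of Protocol 2.3 are simple enough to show numerically: the Kullback–Leibler term for a diagonal Gaussian encoder, and the concatenation of a condition to the latent vector. The sketch assumes a standard normal prior; the latent size, the `t50_max` scaling constant, and the function names are hypothetical. The encoder and decoder networks themselves are omitted.

```python
import numpy as np

def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(logvar) + mu ** 2 - 1.0 - logvar, axis=-1)

def conditioned_latent(z, t50_hours, t50_max=72.0):
    """Append the scaled target t50 to each latent vector, as fed to the decoder."""
    cond = np.full((z.shape[0], 1), t50_hours / t50_max)
    return np.concatenate([z, cond], axis=1)

rng = np.random.default_rng(0)
latent_dim = 16                       # illustrative size, not from the protocol
z = rng.normal(size=(5, latent_dim))  # five samples from the prior N(0, I)
decoder_input = conditioned_latent(z, t50_hours=24.0)
print(decoder_input.shape)            # five rows, latent_dim + 1 columns
```

During training the total loss would be the SMILES reconstruction cross-entropy plus this KL term; at generation time only the prior sample and the condition are needed.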

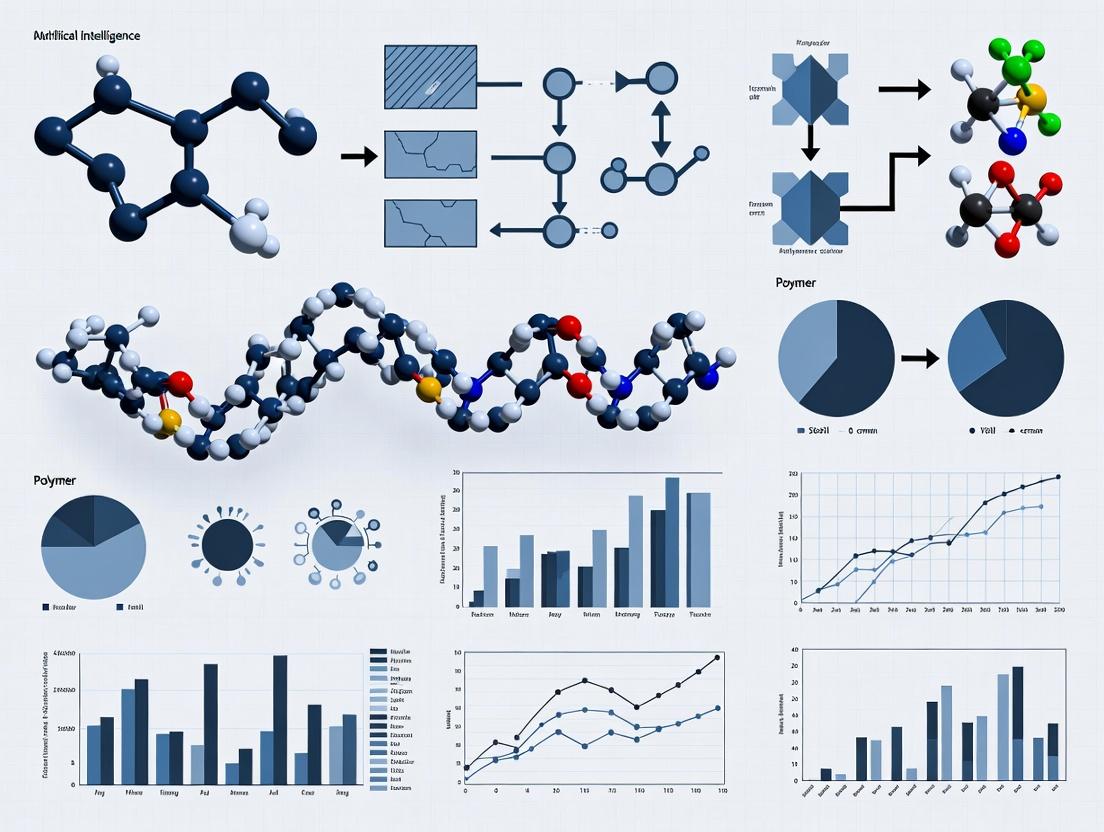

Visualizations

Title: ML Workflow for Polymer Tg Prediction

Title: 1D-CNN for FTIR Polymer Classification

Title: Generative AI for Polymer Design Workflow

The Scientist's Toolkit: Research Reagent Solutions & Essential Materials

Table 3: Key Tools & Materials for AI-Driven Polymer Research

| Item / Solution | Function in AI-Polymer Workflow | Example/Notes |

|---|---|---|

| RDKit (Open-source cheminformatics) | Calculates molecular descriptors from SMILES; validates chemical structures; handles polymer representations. | Essential for featurizing polymer repeat units for ML models and filtering generative model output. |

| Polymer Databases (PoLyInfo, Polymer Genome) | Provides structured, experimental data for training and benchmarking predictive models (Tg, Tm, density, etc.). | Critical for building robust, generalizable models. Data quality is paramount. |

| scikit-learn (Python library) | Implements standard ML algorithms (Random Forest, SVR, etc.) for regression/classification tasks on tabular descriptor data. | Workhorse for traditional QSPR modeling. |

| PyTorch / TensorFlow (DL frameworks) | Provides flexible environment to build and train custom neural networks (GNNs, CNNs, VAEs). | Necessary for implementing state-of-the-art deep and generative learning models. |

| Molecular Dynamics (MD) Simulation Software (e.g., GROMACS, LAMMPS) | Generates in silico data on polymer properties (e.g., diffusion coefficients, mechanical behavior) to augment sparse experimental datasets. | Computational "reagent" for creating data to train AI models where experiments are costly. |

| Automated Synthesis/Screening Platforms (e.g., Chemspeed, flow reactors) | Physically validates AI-generated polymer candidates; generates high-quality, consistent data for model retraining and refinement. | Closes the AI-driven design-make-test-analyze cycle. |

| High-Throughput Characterization (e.g., automated GPC, DSC, plate reader) | Rapidly generates the large-scale property data required to train data-hungry DL models. | Accelerates data acquisition, turning it from a bottleneck into a pipeline. |

This document outlines the application of artificial intelligence, specifically polymer informatics and representation learning, to accelerate the discovery and development of novel polymeric materials. Positioned within the broader thesis of AI in polymer science, these protocols focus on constructing the digital infrastructure—curated datasets, featurization methods, and learning frameworks—essential for predictive modeling. The notes are designed for researchers and professionals aiming to implement data-driven strategies in material design.

A critical first step is access to structured, high-quality data. The following table summarizes major publicly available polymer datasets essential for informatics work.

Table 1: Key Public Polymer Datasets for Informatics

| Dataset Name | Source/Provider | Primary Content | Size (Approx.) | Key Properties Measured |

|---|---|---|---|---|

| Polymer Genome | Georgia Institute of Technology (Ramprasad Group) | Polymer structures and properties | ~1 million data points | Glass transition temp (Tg), dielectric constant, band gap, elasticity |

| PoLyInfo | National Institute for Materials Science (NIMS), Japan | Experimental and calculated polymer data | >200,000 entries | Thermal, mechanical, electrical, permeability properties |

| NIST Polymer Database | National Institute of Standards and Technology (NIST) | Experimentally characterized polymers | Tens of thousands | Thermal degradation, rheology, pyrolysis data |

| Harvard Clean Energy Project Database | Harvard University | Predicted structures for organic photovoltaics | ~2.3 million candidates | Electronic properties (e.g., HOMO/LUMO levels) |

| OMIVD | Several Institutions | Organic mixed ionic-electronic conductors | Growing | Ionic/electronic conductivity, mobility |

Protocol: Constructing a Polymer Dataset for Machine Learning

This protocol details the steps to create a clean, machine-readable dataset from heterogeneous sources.

AIM: To assemble a curated dataset of polymer structures and associated glass transition temperatures (Tg) suitable for training machine learning models.

MATERIALS & REAGENTS:

- Data Source: PoLyInfo or Polymer Genome portal access.

- Software: Python environment (v3.8+), pandas library, RDKit or PolymerX (UMass) cheminformatics toolkit, Jupyter Notebook.

- Computing: Standard workstation or cloud compute instance (≥8 GB RAM).

PROCEDURE:

Data Acquisition:

- Navigate to the target database portal (e.g., PoLyInfo).

- Use the available query interface to filter for polymers with experimentally measured "Glass Transition Temperature."

- Export the full search results, including SMILES or SMILES-like strings (e.g., BigSMILES), polymer name, Tg value, and measurement method. Download in CSV or JSON format.

Data Curation & Cleaning:

- Load the downloaded file into a pandas DataFrame.

- Remove entries where the Tg value is missing, ambiguous, or non-numeric.

- Standardize Tg units to Kelvin or Celsius. Document the choice.

- Remove duplicate entries based on polymer structure representation. Prioritize entries with a documented measurement method (e.g., DSC).

- Handle outliers: Statistically identify (e.g., ±3 standard deviations from mean) and manually inspect extreme Tg values for potential errors.
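The curation steps above map onto a few pandas operations. The sketch below is illustrative only: the column names are hypothetical (a real export will differ), and the Tg values are placeholders rather than database records.

```python
import pandas as pd

# Hypothetical export; real column names depend on the database.
raw = pd.DataFrame({
    "smiles":  ["*CC*", "*CC*", "*CC(C)*", "*CC(c1ccccc1)*", "*CC(Cl)*", "*CCO*"],
    "tg":      ["-120", "-118", "n/a", "100", "81", "-67"],
    "tg_unit": ["C", "C", "C", "C", "C", "C"],
    "method":  [None, "DSC", "DSC", "DSC", "DSC", "DMA"],
})

df = raw.copy()
# 1. Drop missing or non-numeric Tg values.
df["tg"] = pd.to_numeric(df["tg"], errors="coerce")
df = df.dropna(subset=["tg"])
# 2. Standardize units: convert everything to kelvin.
is_celsius = df["tg_unit"] == "C"
df.loc[is_celsius, "tg"] = df.loc[is_celsius, "tg"] + 273.15
df["tg_unit"] = "K"
# 3. De-duplicate by structure, preferring rows with a documented method.
df["has_method"] = df["method"].notna()
df = (df.sort_values("has_method", ascending=False, kind="stable")
        .drop_duplicates(subset="smiles", keep="first")
        .drop(columns="has_method"))
# 4. Flag values beyond 3 standard deviations for manual review (no silent drop).
z = (df["tg"] - df["tg"].mean()) / df["tg"].std()
df["needs_review"] = z.abs() > 3
print(df[["smiles", "tg", "method", "needs_review"]])
```

Flagging rather than deleting outliers keeps the manual-inspection step of the protocol explicit and leaves an audit trail of what was removed.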

Polymer Structure Standardization:

- Use the RDKit or PolymerX library to parse the SMILES/BigSMILES strings.

- Apply a standardization protocol: Remove solvent fragments, neutralize charges if appropriate, and generate canonical representations where possible.

- For BigSMILES, consider using specialized tools for stochastic descriptor generation.

Dataset Splitting:

- Perform a stratified split based on Tg value bins to ensure representative distributions in training and test sets.

- Recommended split: 70% Training, 15% Validation, 15% Test. Ensure no data leakage between sets.

- Save the final cleaned and split datasets as serialized (e.g., .pkl) or flat (e.g., .csv) files.
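A stratified 70/15/15 split on binned Tg values can be sketched with NumPy as follows. The function name, the use of quantile bins, and the number of bins are assumptions made for illustration; the Tg values are synthetic.

```python
import numpy as np

def stratified_split(values, fractions=(0.70, 0.15, 0.15), n_bins=5, seed=0):
    """Split indices into train/val/test so each part spans the value range.

    Samples are grouped into quantile bins of `values`; each bin is shuffled
    and divided according to `fractions`.
    """
    values = np.asarray(values, dtype=float)
    rng = np.random.default_rng(seed)
    edges = np.quantile(values, np.linspace(0, 1, n_bins + 1)[1:-1])
    bin_ids = np.digitize(values, edges)
    parts = ([], [], [])
    for b in range(n_bins):
        idx = rng.permutation(np.flatnonzero(bin_ids == b))
        n_train = int(round(fractions[0] * len(idx)))
        n_val = int(round(fractions[1] * len(idx)))
        parts[0].extend(idx[:n_train])
        parts[1].extend(idx[n_train:n_train + n_val])
        parts[2].extend(idx[n_train + n_val:])
    return tuple(np.array(sorted(p)) for p in parts)

rng = np.random.default_rng(1)
tg = rng.normal(loc=350, scale=60, size=200)  # synthetic Tg values in K
train_idx, val_idx, test_idx = stratified_split(tg)
print(len(train_idx), len(val_idx), len(test_idx))
```

Because the split works on row indices, duplicate structures must already have been removed; otherwise the same polymer could land in both training and test sets.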

Representation Learning for Polymers

Moving beyond traditional fingerprint-based featurization, representation learning involves training models to generate informative, task-optimized embeddings of polymer structures.

Table 2: Common Polymer Representation Learning Approaches

| Approach | Description | Model Example | Output |

|---|---|---|---|

| Sequence-Based (SMILES/BigSMILES) | Treats polymer string as a sequence of tokens. | RNN, LSTM, Transformer | Fixed-length vector embedding |

| Graph-Based | Represents polymer as a graph (atoms=nodes, bonds=edges). | Graph Neural Network (GNN) | Node-level and graph-level embeddings |

| Fragment-Based | Learns from common molecular substructures or motifs. | Neural Fingerprint, Message Passing NN | Vector capturing fragment presence/importance |

Protocol: Training a Graph Neural Network (GNN) for Polymer Property Prediction

This protocol provides a methodology for creating a GNN-based model to predict a target property (e.g., Tg) from a polymer's graph structure.

AIM: To build and train a GNN model that learns from atom- and bond-level features to predict a continuous polymer property.

MATERIALS & REAGENTS:

- Dataset: Curated dataset from the dataset-construction protocol above.

- Software: Python, PyTorch or TensorFlow, Deep Graph Library (DGL) or PyTorch Geometric, scikit-learn, RDKit.

- Hardware: GPU accelerator (e.g., NVIDIA GPU with ≥8GB VRAM) recommended for training.

PROCEDURE:

Graph Construction:

- For each polymer SMILES in the training set, use RDKit to generate a molecular graph.

- Define node features: atomic number, degree, hybridization, etc. (one-hot encoded).

- Define edge features: bond type (single, double, etc.), conjugation, presence in a ring (one-hot encoded).
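The node and edge feature layout can be illustrated without RDKit by writing a small graph by hand. In the sketch below the atom list, hybridization labels, and feature vocabularies are hand-specified assumptions for an acrylonitrile-like unit; in the protocol itself RDKit would supply them from the SMILES string.

```python
import numpy as np

ATOM_TYPES = ["C", "N", "O"]          # illustrative vocabulary
HYBRIDIZATIONS = ["sp", "sp2", "sp3"]
BOND_TYPES = ["single", "double", "triple", "aromatic"]

def one_hot(value, choices):
    """One-hot encode `value` against a fixed list of choices."""
    vec = [0.0] * len(choices)
    vec[choices.index(value)] = 1.0
    return vec

# Hand-written heavy-atom graph for C-C(-C#N): (element, hybridization) per atom
# and (i, j, bond type, in_ring) per bond.
atoms = [("C", "sp3"), ("C", "sp3"), ("C", "sp"), ("N", "sp")]
bonds = [(0, 1, "single", False), (1, 2, "single", False), (2, 3, "triple", False)]

degree = [0] * len(atoms)
for i, j, _, _ in bonds:
    degree[i] += 1
    degree[j] += 1

# Node features: element one-hot + hybridization one-hot + heavy-atom degree.
node_features = np.array([
    one_hot(el, ATOM_TYPES) + one_hot(hyb, HYBRIDIZATIONS) + [float(d)]
    for (el, hyb), d in zip(atoms, degree)
])

# Each bond is stored in both directions so messages can flow both ways.
edge_index = np.array(
    [[i, j] for i, j, _, _ in bonds] + [[j, i] for i, j, _, _ in bonds]
).T
edge_features = np.array(
    [one_hot(bt, BOND_TYPES) + [float(ring)] for _, _, bt, ring in bonds] * 2
)

print(node_features.shape, edge_index.shape, edge_features.shape)
```

The 2 x E `edge_index` layout matches the convention used by PyTorch Geometric, so arrays like these can be converted to tensors with little extra work.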

Model Architecture Definition:

- Implement a Message Passing Neural Network (MPNN) framework.

- Graph Convolution Layers: Use 3-5 layers of a convolution operator (e.g., GCN, GAT, or MPNN). Each layer updates node representations by aggregating information from neighboring nodes.

- Global Pooling: After the final convolution layer, apply a global pooling operation (e.g., global mean or sum pooling) to generate a single graph-level representation vector for the entire polymer.

- Readout/Regression Head: Feed the graph-level vector through 2-3 fully connected (dense) layers with non-linear activations (e.g., ReLU) to produce a final scalar prediction (Tg).
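The architecture described above (neighbour aggregation, global pooling, regression head) can be written out in a few lines of NumPy to make the data flow concrete. This is a simplified forward pass with random, untrained weights and mean aggregation; it is a sketch of the general idea, not the GCN, GAT, or MPNN operators of any particular library, and it ignores edge features.

```python
import numpy as np

def message_passing_layer(h, edge_index, weight):
    """One mean-aggregation layer: h_i <- ReLU(W . mean(h_i and its neighbours))."""
    n = h.shape[0]
    adj = np.eye(n)                              # self-loops keep each node's own state
    adj[edge_index[1], edge_index[0]] = 1.0      # row = receiver, column = sender
    adj = adj / adj.sum(axis=1, keepdims=True)   # row-normalise: mean over neighbourhood
    return np.maximum(adj @ h @ weight, 0.0)

def predict(h, edge_index, layer_weights, head_weights):
    """Stack message-passing layers, mean-pool to one vector, apply a linear head."""
    for w in layer_weights:
        h = message_passing_layer(h, edge_index, w)
    graph_vector = h.mean(axis=0)                # global mean pooling
    return float(graph_vector @ head_weights)

rng = np.random.default_rng(0)
# Toy 4-atom chain 0-1-2-3 with 7 input features per atom; edges in both directions.
edge_index = np.array([[0, 1, 1, 2, 2, 3], [1, 0, 2, 1, 3, 2]])
h0 = rng.normal(size=(4, 7))
hidden = 16
layer_weights = [rng.normal(scale=0.3, size=(7, hidden))] + [
    rng.normal(scale=0.3, size=(hidden, hidden)) for _ in range(2)
]
head_weights = rng.normal(scale=0.3, size=hidden)

y_hat = predict(h0, edge_index, layer_weights, head_weights)
print(y_hat)
```

Because aggregation and pooling are both order-independent, relabelling the atoms leaves the prediction unchanged, which is the property that makes graph models suitable for molecular input.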

Training Loop:

- Loss Function: Use Mean Squared Error (MSE) for regression.

- Optimizer: Use Adam optimizer with an initial learning rate of 0.001.

- Batch Training: Employ mini-batch training. Create a DataLoader that batches multiple graphs.

- Validation: Evaluate model performance on the validation set after each training epoch. Implement early stopping if validation loss plateaus for a set number of epochs (e.g., 20).
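The early-stopping rule in the validation step can be expressed independently of any deep learning framework. The helper below is a hypothetical illustration that operates on a list of per-epoch validation losses; in a real training loop the same counter would sit inside the epoch loop and trigger a model checkpoint on each improvement.

```python
def early_stopping_epochs(val_losses, patience=20, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Training stops once `patience` consecutive epochs pass without the
    validation loss improving on the best value by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0  # real training: save a checkpoint here
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Simulated validation curve: improves for 30 epochs, then drifts upward (overfitting).
simulated = [1.0 / (1 + e) for e in range(30)] + [0.04 + 0.001 * e for e in range(100)]
best_epoch, stop_epoch = early_stopping_epochs(simulated, patience=20)
print(best_epoch, stop_epoch)
```

The model evaluated on the test set should be the checkpoint from `best_epoch`, not the weights at the epoch where training stopped.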

Evaluation:

- After training, evaluate the final model on the held-out test set.

- Report standard metrics: Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), and the coefficient of determination (R²).

Visualizations

Title: Polymer Informatics Data-to-Prediction Workflow

Title: GNN Architecture for Polymer Property Prediction

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Digital Research Tools for Polymer Informatics

| Item/Category | Specific Tool/Resource | Function & Purpose |

|---|---|---|

| Cheminformatics Core | RDKit, PolymerX (UMass) | Open-source libraries for polymer/molecule manipulation, fingerprint generation, and graph construction. Essential for featurization. |

| Deep Learning Framework | PyTorch, TensorFlow | Flexible ecosystems for building and training custom neural network models, including GNNs. |

| GNN Specialized Library | Deep Graph Library (DGL), PyTorch Geometric | High-level APIs built on top of core frameworks that simplify the implementation of graph neural networks. |

| Data Handling & Analysis | pandas, NumPy, Jupyter | For dataset cleaning, manipulation, statistical analysis, and interactive prototyping. |

| Property Prediction Service | Polymer Genome Web App | Pre-trained models for instant prediction of key properties from a polymer structure, useful for benchmarking. |

| High-Performance Computing | Cloud GPUs (AWS, GCP), Local GPU Cluster | Accelerates the training of deep learning models from days to hours. Critical for representation learning. |

Within the broader thesis on Artificial Intelligence in polymer science, this document details the use of machine learning (ML) models to predict polymer properties directly from Simplified Molecular-Input Line-Entry System (SMILES) representations. Predicting properties from structure alone enables rapid virtual screening of polymer libraries, accelerating the design of materials with tailored properties for applications in drug delivery, biomedical devices, and sustainable materials.

Application Notes: AI-Driven Polymer Property Prediction

Core Methodology

The workflow involves converting SMILES strings into numerical descriptors or learned representations, which serve as input for supervised ML models. Recent advances utilize graph neural networks (GNNs) that operate directly on the molecular graph, implicitly learning structure-property relationships without manual feature engineering.

Key Predictive Tasks & Performance

The following table summarizes quantitative benchmarks from recent literature (2023-2024) for key polymer properties.

Table 1: Performance of AI Models on Polymer Property Prediction Tasks

| Target Property | Model Architecture | Dataset Size | Performance (Metric) | Key Reference/Platform |

|---|---|---|---|---|

| Glass Transition Temp. (Tg) | Directed Message Passing NN | ~12,000 polymers | MAE = 18.2°C, R² = 0.85 | PolymerGNN (2023) |

| Young's Modulus (E) | Graph Convolutional NN (GCN) | ~8,500 polymers | MAE = 0.18 log(Pa), R² = 0.79 | PolyBERT (2024) |

| Band Gap (Eg) | Attentive FP | ~6,200 polymers | MAE = 0.32 eV, R² = 0.91 | Zhavoronkov et al., 2024 |

| Degradation Rate (Hydrolysis) | Gradient Boosting (XGBoost) on Mordred descriptors | ~3,500 polymers | RMSE = 0.25 log(rate), Spearman ρ = 0.81 | Polyverse Database |

| Drug Encapsulation Efficiency | Multitask GNN | ~2,100 polymer-drug pairs | MAE = 5.8%, AUC-ROC = 0.89 | PharmaPoly AI Suite |

MAE: Mean Absolute Error; RMSE: Root Mean Square Error

Experimental Protocols

Protocol: Training a GNN for Tg Prediction from SMILES

Objective: To build a predictive model for glass transition temperature using a curated polymer dataset.

Materials: See "The Scientist's Toolkit" below.

Procedure:

- Data Curation: Compile a dataset of polymer SMILES and corresponding experimental Tg values from sources like PoLyInfo, NIST, or commercial databases. SMILES should represent a repeating unit with explicit asterisks (*) for connection points.

- Data Preprocessing:

- Standardize SMILES using RDKit's `Chem.MolFromSmiles()` and `Chem.MolToSmiles()`.

- Handle missing values and remove physically implausible entries or outliers (e.g., Tg < 0 K or > 600 K).

- Split data into training (70%), validation (15%), and test (15%) sets using scaffold splitting, so that the test set probes generalization to structural families not seen in training.

- Feature Representation: Use a GNN framework (e.g., PyTorch Geometric). Each polymer is represented as a graph where atoms are nodes (features: atomic number, hybridization) and bonds are edges (features: bond type).

- Model Training:

- Configure a Directed Message Passing Neural Network (D-MPNN) with 3 message-passing layers, hidden size of 300, and a final feed-forward regression head.

- Loss Function: Mean Squared Error (MSE).

- Optimizer: Adam with an initial learning rate of 0.001 and decay scheduler.

- Train for up to 500 epochs, monitoring validation loss for early stopping.

- Model Evaluation: Predict Tg on the held-out test set. Report MAE, R², and parity plots.
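Two of the data checks in this protocol need no cheminformatics library: confirming that each repeat-unit SMILES carries two `*` connection points, and filtering Tg values to the stated range. The sketch below assumes linear polymers with exactly two connection points; the helper names and the example records are placeholders, not database entries.

```python
def has_two_connection_points(smiles):
    """True if the repeat-unit SMILES carries exactly two '*' connection points."""
    return smiles.count("*") == 2

def plausible_tg(tg_kelvin, low=0.0, high=600.0):
    """True if Tg lies within the range kept by the preprocessing step (in K)."""
    return low <= tg_kelvin <= high

# Illustrative records (SMILES, Tg in K); the values are placeholders.
records = [
    ("*CC*", 155.0),
    ("*CC(C)*", 260.0),
    ("CC(C)C", 300.0),            # no connection points: a small molecule, not a repeat unit
    ("*CC(c1ccccc1)*", 373.0),
    ("*CC(Cl)*", -5.0),           # negative kelvin value: likely a unit error
    ("*CCO*", 2060.0),            # far above the accepted range
]

kept = [(s, tg) for s, tg in records if has_two_connection_points(s) and plausible_tg(tg)]
rejected = [r for r in records if r not in kept]
print(len(kept), "kept;", len(rejected), "rejected")
```

Rejected records are worth logging rather than discarding, since many of them point to unit or transcription errors that can be corrected at the source.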

Protocol: High-Throughput Virtual Screening for Drug Encapsulation

Objective: To screen a virtual library of 10,000 copolymer SMILES for optimal encapsulation efficiency of a specific drug (e.g., Doxorubicin).

Procedure:

- Library Generation: Use a combinatorial SMILES generator to create a library of candidate copolymers (e.g., variations in side chains, backbone monomers). Ensure the generated SMILES pass synthetic-accessibility filters.

- Descriptor Calculation: For each candidate SMILES, calculate a set of 2D/3D molecular descriptors (e.g., logP, topological polar surface area, molecular weight) using RDKit or Mordred.

- Model Inference: Load a pre-trained multitask GNN model (trained on polymer-drug pair data). Input the candidate polymer SMILES and the drug's SMILES (or its descriptor vector). Run batch inference to obtain predicted encapsulation efficiency scores.

- Post-Processing & Ranking: Rank all candidates by predicted efficiency. Apply additional filters (e.g., synthetic complexity score, biodegradability prediction). Select the top 50 candidates for in silico stability simulation (e.g., molecular dynamics).

- Validation: Synthesize and experimentally test the top 5-10 ranked polymers to validate model predictions.
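The enumerate, score, and rank pattern of this screening protocol can be sketched with the standard library. Everything specific here is an assumption for illustration: the building blocks, the composition grid, and above all `placeholder_score`, which stands in for inference with a trained model and has no physical meaning. The library is also far smaller than the 10,000 candidates described above.

```python
from itertools import product

# Hypothetical building blocks: name -> repeat-unit SMILES with '*' connection points.
backbones = {
    "lactide": "*OC(C)C(=O)*",
    "glycolide": "*OCC(=O)*",
    "caprolactone": "*OCCCCCC(=O)*",
}
hydrophilic_blocks = {"PEG": "*OCC*", "none": ""}
backbone_fractions = (0.25, 0.50, 0.75)

def placeholder_score(backbone, block, fraction):
    """Stand-in for a trained model's predicted encapsulation efficiency (%).

    This arbitrary formula only makes the sketch runnable.
    """
    base = {"lactide": 60.0, "glycolide": 50.0, "caprolactone": 65.0}[backbone]
    bonus = 10.0 if block == "PEG" else 0.0
    return base + bonus - 20.0 * abs(fraction - 0.5)

# Enumerate the combinatorial library and attach a predicted score to each candidate.
library = [
    {
        "backbone": b,
        "block": h,
        "fraction": f,
        "smiles": (backbones[b], hydrophilic_blocks[h]),
        "score": placeholder_score(b, h, f),
    }
    for b, h, f in product(backbones, hydrophilic_blocks, backbone_fractions)
]

# Rank by predicted efficiency and keep the top candidates for follow-up simulation.
ranked = sorted(library, key=lambda c: c["score"], reverse=True)
top = ranked[:5]
for c in top:
    print(c["backbone"], c["block"], c["fraction"], round(c["score"], 1))
```

Additional filters such as a synthetic complexity score would be applied to `ranked` before the final cut, in the same way as the score itself.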

Visualizations

AI Polymer Property Prediction Workflow

Protocol for Training a Tg Prediction Model

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials & Tools for AI-Driven Polymer Research

| Item / Solution | Function / Purpose | Example Vendor / Library |

|---|---|---|

| RDKit | Open-source cheminformatics toolkit for SMILES parsing, descriptor calculation, and molecular operations. | www.rdkit.org |

| PyTorch Geometric (PyG) | Library for building and training GNNs on irregular graph data (e.g., molecular graphs). | pytorch-geometric.readthedocs.io |

| DeepChem | High-level framework for applying deep learning to chemistry, including polymer datasets and models. | deepchem.io |

| Polymer SMILES Standardizer Script | Custom script to ensure polymer SMILES use consistent notation for repeating units and terminal groups. | In-house development recommended |

| PoLyInfo / NIST Polymer Database | Primary source for curated experimental polymer property data for model training and validation. | polymer.nims.go.jp / nist.gov |

| Mordred Descriptor Calculator | Computes a comprehensive set (1,600+) of molecular descriptors from SMILES for traditional ML input. | github.com/mordred-descriptor/mordred |

| GPU Computing Instance | (e.g., NVIDIA V100/A100) Accelerates the training of large GNN models on datasets with >10,000 polymers. | AWS, Google Cloud, Azure |

| Automated Validation Suite | Scripts to run baseline models (e.g., Random Forest) and generate standard performance plots for comparison. | In-house development recommended |

This article, framed within a broader thesis on Artificial Intelligence in polymer science applications research, details key application notes and experimental protocols emerging from recent (2023-2024) initiatives. The focus is on providing actionable methodologies for researchers, scientists, and drug development professionals.

Application Note 1: AI-Driven Discovery of Degradable Polymers

Background: A major initiative involves using machine learning (ML) to design polymers with programmable degradation profiles for drug delivery and sustainability.

Key Data (2023-2024): Table 1: Performance of ML Models in Predicting Polymer Degradation Half-life (t₁/₂)

| Model Architecture | Training Data Size (Polymer Structures) | Prediction Accuracy (R²) | Reported Use Case |

|---|---|---|---|

| Graph Neural Network (GNN) | 12,000 | 0.89 | Hydrolytic degradation in aqueous media |

| Transformer-based | 8,500 | 0.92 | Enzymatic degradation prediction |

| Ensemble (RF + GNN) | 15,000 | 0.91 | High-throughput screening for compostable plastics |

Experimental Protocol: High-Throughput Validation of AI-Predicted Degradable Polymers

Objective: To experimentally validate the degradation half-life of novel polymer candidates identified by an AI screening model.

Materials:

- Polymer Library: 50 AI-predicted candidate polymers (solid form).

- Buffer Solutions: Phosphate-buffered saline (PBS) at pH 7.4 and 5.0.

- Enzyme Solution: Proteinase K (0.2 mg/mL in PBS pH 7.4).

- Analytical Instrument: Gel Permeation Chromatography (GPC) system with refractive index detector.

- Incubation System: Thermostated shaking incubator.

Procedure:

- Sample Preparation: Weigh 20 mg of each polymer into separate 4 mL glass vials (n=3 per condition and time point, since vials are removed at each sampling interval).

- Degradation Media Addition: Add 2 mL of the appropriate degradation media (PBS pH 7.4, PBS pH 5.0, or Enzyme Solution) to each vial.

- Incubation: Place vials in a shaking incubator at 37°C and 100 rpm.

- Time-point Sampling: At predetermined intervals (e.g., 1, 7, 30 days), remove triplicate vials for each polymer-condition pair.

- Analysis: a. Filter the solution to isolate undegraded polymer. b. Dry the polymer under vacuum. c. Dissolve in GPC solvent (e.g., THF) at a known concentration. d. Analyze via GPC to determine the change in molecular weight (Mw) over time.

- Data Processing: Plot Mw vs. time for each candidate. Calculate degradation rate constants and compare t₁/₂ to AI model predictions.
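One common way to carry out the data-processing step is to assume first-order (exponential) decay of Mw and fit a straight line to ln(Mw) versus time. The sketch below makes that assumption explicit and defines the half-life as the time for Mw to fall to half its initial value; other kinetic models may fit a given polymer better. The GPC values are synthetic.

```python
import numpy as np

def first_order_half_life(time_days, mw):
    """Fit ln(Mw) = ln(Mw0) - k*t by least squares; return (k, t_half)."""
    slope, _intercept = np.polyfit(np.asarray(time_days, float), np.log(mw), deg=1)
    k = -slope
    return k, np.log(2) / k

# Synthetic GPC readout for one polymer-condition pair (not measured data):
# Mw0 = 50 kDa decaying with k = 0.05 per day, plus 2 % multiplicative noise.
rng = np.random.default_rng(3)
t = np.array([0, 1, 7, 14, 30], dtype=float)
mw = 50_000 * np.exp(-0.05 * t) * (1 + rng.normal(scale=0.02, size=t.size))

k, t_half = first_order_half_life(t, mw)
print(f"k = {k:.3f} per day, t1/2 = {t_half:.1f} days")
```

The fitted half-life can then be compared with the model-predicted value for the same polymer and medium, ideally using the replicate vials to estimate its uncertainty.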

Research Reagent Solutions:

- GPC/SEC Standards: Narrow dispersity polystyrene (PS) and poly(methyl methacrylate) (PMMA) standards for instrument calibration and accurate Mw determination.

- Stabilized Enzyme Preparations: Lyophilized, activity-quantified enzymes (e.g., Proteinase K, Lipase) for consistent enzymatic degradation assays.

- AI-Tagged Polymer Libraries: Commercially available polymer libraries where each structure is linked to computed molecular descriptors for direct ML model input.

Application Note 2: Generative AI for Monomer Selection and Property Prediction

Background: Generative models are being used to propose novel monomer combinations and predict the resulting bulk polymer properties, accelerating formulation for specific applications like membrane design or thermoplastic elastomers.

Key Data (2023-2024): Table 2: Generative Model Output for Gas Separation Membrane Polymers

| Target Property | Generative Model Type | # of Novel Proposed Structures | Top Predicted PIM-1 Analog Performance (CO₂/N₂ selectivity) |

|---|---|---|---|

| High CO₂ Permeability & Selectivity | Variational Autoencoder (VAE) | 1,200 | Selectivity: 28 (Predicted), 25 (Experimental) |

| High Chemical Stability | Reinforcement Learning (RL) | 850 | Maintained >90% performance after 30-day solvent exposure |

Experimental Protocol: Synthesis and Validation of Generative AI-Designed Monomers

Objective: To synthesize a novel trifunctional monomer proposed by a generative AI model for high-performance network polymers.

Materials:

- AI-Designed Monomer Precursors: As specified by the model output (e.g., 1,3,5-tris(4-aminophenyl)benzene derivative).

- Anhydrous Solvent: Dimethylacetamide (DMAc), stored over molecular sieves.

- Catalyst: Triphenylphosphine (TPP).

- Reagents: Pyridine, and appropriate crosslinking agent (e.g., aromatic dianhydride).

- Characterization: NMR, FT-IR, Differential Scanning Calorimetry (DSC).

Procedure:

- Monomer Synthesis: a. In a flame-dried flask under N₂, dissolve AI-proposed precursor (10 mmol) in 50 mL anhydrous DMAc. b. Add pyridine (30 mmol) and TPP (2 mmol) as catalyst. c. Slowly add the crosslinking agent (e.g., dianhydride, 15 mmol; for a trifunctional amine precursor this corresponds to a 1:1 amine:anhydride group ratio, and the amount can be offset from this value to introduce a stoichiometric imbalance that controls crosslink density). d. Stir at room temperature for 24 hours under inert atmosphere.

- Polymer Film Formation: a. Cast the resulting poly(amic acid) solution onto a clean glass plate. b. Thermally imidize using a step-wise protocol: 80°C/1h, 150°C/1h, 250°C/2h under vacuum.

- Property Validation: a. Perform FT-IR to confirm imidization (disappearance of amic acid peaks ~1650 cm⁻¹, appearance of imide peaks ~1780 cm⁻¹). b. Use DSC to measure glass transition temperature (Tg). c. Conduct gas permeation tests (e.g., constant-volume/variable-pressure method) to validate predicted selectivity.
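The feed stoichiometry in the synthesis step can be checked with a short calculation of the reactive-group ratio. The sketch assumes the precursor is a triamine (functionality 3) and the crosslinking agent a dianhydride (functionality 2), as in the examples given; the 10 % amine excess is an arbitrary illustration.

```python
def functional_group_ratio(mmol_a, functionality_a, mmol_b, functionality_b):
    """Ratio of A-type to B-type reactive groups in the feed."""
    return (mmol_a * functionality_a) / (mmol_b * functionality_b)

# Quantities from the procedure, assuming a triamine (f = 3) and a dianhydride (f = 2).
r = functional_group_ratio(mmol_a=10, functionality_a=3, mmol_b=15, functionality_b=2)
print(f"amine : anhydride group ratio = {r:.2f}")

# Dianhydride amount (mmol) for a chosen off-stoichiometric ratio, e.g. 10 % excess amine.
target_ratio = 1.10
mmol_dianhydride = (10 * 3) / (target_ratio * 2)
print(f"dianhydride for r = {target_ratio}: {mmol_dianhydride:.1f} mmol")
```

Working in reactive groups rather than moles of monomer makes it easier to see how far a given feed is from the balanced point when the two monomers have different functionality.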

The Scientist's Toolkit:

- Automated Parallel Synthesis Reactors: Platforms (e.g., Chemspeed, Unchained Labs) for high-throughput synthesis of AI-generated monomer/polymer candidates.

- Integrated Characterization Suites: Combined GPC-IR-UV systems for simultaneous molecular weight and chemical composition analysis.

- Cloud-Based Polymer Databases: Commercial platforms (e.g., Polymer Property Predictor, Citrination) providing APIs for training and querying custom ML models.

Visualization: AI-Polymer Discovery Workflow

Title: AI-Driven Polymer Discovery and Validation Cycle

Visualization: Key Pathways in AI-Polymer Science Integration

Title: AI Approaches and Experimental Validation Pathways

From Code to Lab: AI Methodologies for Designing and Synthesizing Smart Polymeric Systems

This application note contributes to the broader thesis on Artificial Intelligence in Polymer Science Applications Research. It details a specific implementation where inverse design—driven by machine learning (ML) and deep learning (DL)—enables the de novo generation of polymeric materials with pre-defined, complex drug release profiles. This paradigm shifts the research methodology from iterative, trial-and-error synthesis to a targeted, prediction-first approach.

Core Principles & Current State of Research

Inverse design in this context refers to an AI model that starts with a desired drug release curve as input and outputs one or more candidate polymer structures predicted to achieve it. Current models integrate several key data types:

- Polymer Structural Descriptors: SMILES strings, molecular weight, polydispersity index (PDI), monomer ratios, functional groups, crystallinity, glass transition temperature (Tg).

- Drug Properties: LogP, molecular weight, solubility, pKa.

- Formulation & Process Parameters: Polymer:drug ratio, encapsulation efficiency, particle size (nanoparticle/microparticle), fabrication method (e.g., emulsion-solvent evaporation).

- Release Profile Data: Cumulative drug release over time (e.g., 0-30 days), often fitted to mathematical models (Higuchi, Korsmeyer-Peppas, zero/first-order).

Recent advances (2023-2024) highlight the use of Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Graph Neural Networks (GNNs) to explore the vast chemical space of biodegradable polymers (e.g., PLGA, PLA, polyanhydrides, polycarbonates).

Table 1: Summary of Recent AI Model Performances in Polymer-for-Release Inverse Design

| Model Architecture | Polymer Class | Key Performance Metric | Reported Outcome (Mean ± SD) | Reference Year |

|---|---|---|---|---|

| Conditional VAE (cVAE) | PLGA Copolymers | Release Profile Prediction RMSE | 4.8% ± 0.7% (cumulative release) | 2023 |

| GNN + Bayesian Optimization | Polymeric Nanoparticles | Design Success Rate (within 10% of target profile) | 78% over 5 validation cycles | 2024 |

| Transformer-based Generator | Hydrogel-Forming Polymers | Novelty/Validity of Generated Structures | 92% valid, 65% novel (vs. training set) | 2023 |

Detailed Protocols

Protocol A: Data Curation and Feature Engineering for AI Training

Objective: To construct a robust, machine-readable dataset linking polymer characteristics to experimental drug release profiles.

Materials & Software:

- Sources: Published literature databases (PubMed, Web of Science), internal experimental data, polymer databases (PoLyInfo, PubChem).

- Tools: Python (Pandas, NumPy, RDKit), KNIME Analytics Platform.

- Curation Steps:

- Data Extraction: Systematically extract structured data from identified sources. Key fields include polymer SMILES, drug SMILES, molecular weights, formulation parameters (Table 2), and time-release data points.

- Standardization: Normalize all polymer and drug structures using RDKit (canonical SMILES, desalting). Standardize time units to hours and release to percentage cumulative.

- Feature Calculation: Use RDKit to compute molecular descriptors for both polymer and drug (e.g., topological polar surface area, hydrogen bond donors/acceptors, rotatable bonds). Calculate formulation descriptors like drug loading percentage.

- Release Profile Parameterization: Fit normalized release curves to the Korsmeyer-Peppas model (Mt/M∞ = k*t^n) to extract the release rate constant (k) and diffusion exponent (n). These serve as compact, continuous target variables for the AI model.

- Dataset Assembly: Assemble final dataset where each row represents a unique polymer-drug-formulation combination, with columns for all computed features and target parameters (k, n).
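
The release-profile parameterization above can be sketched as a log-log linear fit. This is a minimal illustration rather than the pipeline used in any cited study: the function name `fit_korsmeyer_peppas`, the 60% release cutoff (a common convention for this model, exposed here as a parameter), and the synthetic data are all assumptions.

```python
import math

def fit_korsmeyer_peppas(times_h, frac_released, max_fraction=0.6):
    """Fit Mt/Minf = k * t**n by least squares on log-transformed data.

    Only points with t > 0 and 0 < fraction <= max_fraction are used
    (assumed cutoff; adjust to the dataset). Returns (k, n).
    """
    pts = [(math.log(t), math.log(f))
           for t, f in zip(times_h, frac_released)
           if t > 0 and 0 < f <= max_fraction]
    if len(pts) < 2:
        raise ValueError("need at least two usable data points")
    mean_x = sum(x for x, _ in pts) / len(pts)
    mean_y = sum(y for _, y in pts) / len(pts)
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    n = sxy / sxx                       # slope of log(f) vs log(t)
    k = math.exp(mean_y - n * mean_x)   # intercept gives log(k)
    return k, n

# Synthetic check: data generated from k = 0.05, n = 0.5 (time in hours)
times = [1, 4, 9, 16, 25, 36, 64, 100]
release = [0.05 * t ** 0.5 for t in times]
k_fit, n_fit = fit_korsmeyer_peppas(times, release)
```

The same function can be reused on experimental curves to obtain the measured parameters that are compared against the design targets.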

Protocol B: Implementing a Conditional VAE for Polymer Generation

Objective: To train a model that generates novel polymer structures conditioned on desired k and n values.

Workflow Diagram:

Title: cVAE for Conditional Polymer Generation

Training Procedure:

- Input Encoding: Represent each polymer in the training set from Protocol A as a one-hot encoded SMILES string or a molecular graph.

- Model Architecture: Implement a cVAE where the encoder (qφ(z|x, c)) maps input polymer x and its condition c (k, n) to a latent distribution. The decoder (pθ(x|z, c)) reconstructs the polymer from a sampled latent vector z and the condition c.

- Loss Function: Minimize the loss: L(θ,φ) = -E[log pθ(x|z,c)] + β * KL(qφ(z|x,c) || p(z)), where p(z) is a standard normal prior. The β term controls latent space regularization.

- Training: Train for 500-1000 epochs using the Adam optimizer. Monitor reconstruction accuracy and validity of randomly sampled structures.

- Generation: To generate new polymers, sample a random latent vector z and concatenate it with the desired condition vector c (target k, n). Pass this through the trained decoder to produce a novel polymer SMILES string.
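
A minimal sketch of the loss and the generation input from Protocol B, written in plain Python so the arithmetic is visible. It assumes a diagonal Gaussian encoder (for which the KL term has a closed form) and a single-sample estimate of the reconstruction term. The function names are hypothetical, and a working model would compute the same quantities on PyTorch or TensorFlow tensors.

```python
import math
import random

def kl_diag_gaussian(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) in closed form."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, logvar))

def cvae_loss(token_probs, mu, logvar, beta=1.0):
    """Reconstruction negative log-likelihood plus beta-weighted KL term.

    token_probs: probability the decoder assigned to each correct SMILES
    token for one training example (single-sample estimate of the expectation).
    """
    recon_nll = -sum(math.log(p) for p in token_probs)
    return recon_nll + beta * kl_diag_gaussian(mu, logvar)

def decoder_input(latent_dim, k_target, n_target, rng=random):
    """Sample z ~ N(0, I) and append the condition c = (k, n)."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return z + [k_target, n_target]

# Illustrative values only
example_loss = cvae_loss([0.9, 0.8, 0.95], mu=[0.1, -0.2], logvar=[0.0, 0.0], beta=0.5)
example_input = decoder_input(8, k_target=0.05, n_target=0.5)
```

Raising `beta` pushes the latent distribution toward the prior at the expense of reconstruction accuracy, which is the trade-off the β term in the loss controls.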

Protocol C: Experimental Validation of AI-Designed Polymers

Objective: To synthesize and test lead AI-generated polymer candidates.

Synthesis Protocol (for PLGA-like Copolymers):

- Monomer Preparation: Based on the generated structure (e.g., a lactide/glycolide/depsipeptide copolymer), prepare the corresponding lactide, glycolide, and functional monomer precursors under anhydrous conditions.

- Ring-Opening Polymerization: Conduct polymerization in a sealed reactor under argon. Use stannous octoate (0.05 wt%) as catalyst, with a purified monomer mixture. React at 140°C for 12-24 hours.

- Purification: Dissolve the cooled product in dichloromethane and precipitate into a 10-fold excess of cold methanol/ether (50:50). Filter and dry under vacuum to constant weight.

- Characterization: Determine molecular weight and PDI via GPC. Confirm structure via 1H NMR. Determine Tg by DSC.

Formulation & Release Testing Protocol:

- Nanoparticle Fabrication: Prepare drug-loaded nanoparticles via double emulsion-solvent evaporation (W/O/W). Briefly, dissolve polymer and model drug (e.g., doxorubicin) in DCM. Emulsify with primary aqueous phase. Pour into secondary aqueous phase (PVA). Stir to evaporate DCM, harvest by centrifugation, and wash.

- In Vitro Release Study: Place a known amount of drug-loaded nanoparticles in phosphate-buffered saline (PBS, pH 7.4) with 0.1% w/v Tween 80 at 37°C under gentle agitation. At predetermined time points, centrifuge samples, withdraw supernatant for HPLC analysis, and replace with fresh release medium.

- Data Comparison: Plot experimental cumulative release vs. time. Fit the curve to derive experimental k_exp and n_exp. Compare with the target k and n used to generate the polymer.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for AI-Driven Polymer Synthesis & Testing

| Item | Function in Protocol | Example/Catalog Consideration |

|---|---|---|

| RDKit (Open-Source) | Calculates molecular descriptors & fingerprints from SMILES for model features. | Used in Python for feature engineering (Protocol A). |

| PyTorch / TensorFlow | Provides framework for building & training deep learning models (cVAE, GNN). | Essential for Protocol B implementation. |

| Lactide & Glycolide Monomers | Core building blocks for synthesizing biodegradable polyesters (PLGA). | Purify via recrystallization before polymerization (Protocol C). |

| Stannous Octoate [Sn(Oct)₂] | Catalyst for ring-opening polymerization of cyclic esters. | Use at low concentrations (0.01-0.1%) under anhydrous conditions. |

| Dialysis Membranes or Float-A-Lyzers | Used for in vitro release studies, allowing buffer exchange while retaining nanoparticles. | Select MWCO appropriate for drug and potential polymer fragments. |

| Polyvinyl Alcohol (PVA) | Common stabilizer/emulsifier for forming nanoparticles via emulsion methods. | Use low molecular weight (e.g., 13-23 kDa) for consistent particle formation. |

This integrated pipeline of AI-driven inverse design and experimental validation, situated within the broader thesis of AI in polymer science, demonstrates a transformative methodology. It significantly accelerates the development of polymeric drug delivery systems. Future directions include incorporating multi-objective optimization (e.g., balancing release profile with toxicity or synthetic feasibility) and expanding models to predict more complex release behaviors, such as pulsatile or environmentally triggered release.

This application note details a computational workflow for the high-throughput virtual screening (HTVS) of polymer libraries, framed within a broader thesis on artificial intelligence in polymer science. The protocol integrates molecular dynamics (MD), machine learning (ML) classifiers, and property prediction models to rapidly identify candidate polymers for biomedical applications such as drug delivery, tissue engineering, and implantable devices.

Key Research Reagent Solutions & Materials

| Item Name | Function in Virtual Screening |

|---|---|

| Polymer Database (e.g., PoLyInfo) | A curated source of polymer chemical structures and experimental properties for training and validation. |

| Molecular Dynamics (MD) Engine (e.g., GROMACS) | Simulates the physical behavior and conformational dynamics of polymer chains in a solvated environment. |

| Quantum Chemistry Software (e.g., Gaussian, ORCA) | Calculates electronic structure properties, such as frontier orbital energies, for monomer units. |

| Machine Learning Library (e.g., scikit-learn, PyTorch) | Enables the development of classification and regression models for polymer property prediction. |

| High-Performance Computing (HPC) Cluster | Provides the necessary computational power for parallel MD simulations and ML model training. |

| Descriptor Calculation Tool (e.g., RDKit) | Generates numerical representations (e.g., molecular fingerprints, topological indices) from polymer SMILES strings. |

Core Protocol: HTVS Workflow for Biomedical Polymer Identification

Phase 1: Library Curation and Featurization

Objective: Assemble a virtual library and compute molecular descriptors.

- Library Assembly: Curate a library of candidate polymers in SMILES notation, focusing on known biocompatible backbones (e.g., polyesters, polyacrylates, polyethylene glycol derivatives). A sample set is shown in Table 1.

- Descriptor Calculation: For each unique monomer or repeating unit, compute a set of 200+ molecular descriptors using RDKit. This includes topological, constitutional, and electronic descriptors.

- Data Structuring: Compile descriptors into a feature matrix (X) where rows represent polymers and columns represent descriptor values.

Phase 2: Initial Filtering with ML Classifiers

Objective: Apply pre-trained ML models to filter out polymers with undesirable properties.

- Load Pre-trained Models: Utilize models trained on labeled polymer data to predict key binary properties:

- Cytotoxicity Classifier: Predicts likely cytotoxic/non-cytotoxic.

- Degradation Rate Classifier: Predicts fast/slow hydrolytic degradation.

- Parallel Prediction: Run the entire feature matrix (X) through each classifier to obtain prediction probabilities.

- Apply Thresholds: Retain only polymers that pass all filters (e.g., predicted non-cytotoxic with probability >0.85 AND predicted slow degradation with probability >0.7 for a long-term implant).
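
The thresholding step can be sketched as a simple conjunction of probability cutoffs. The cutoffs below are the ones quoted for the long-term implant example; the polymer IDs, dictionary keys, and probability values are hypothetical placeholders for real classifier output.

```python
def passes_filters(probs, thresholds):
    """True only if every predicted probability exceeds its cutoff."""
    return all(probs[name] > cutoff for name, cutoff in thresholds.items())

# Cutoffs from the long-term implant example
thresholds = {"non_cytotoxic": 0.85, "slow_degradation": 0.70}

# Hypothetical classifier outputs (illustrative values only)
predictions = {
    "poly_A": {"non_cytotoxic": 0.97, "slow_degradation": 0.95},
    "poly_B": {"non_cytotoxic": 0.80, "slow_degradation": 0.90},
    "poly_C": {"non_cytotoxic": 0.90, "slow_degradation": 0.60},
}

retained = [pid for pid, p in predictions.items()
            if passes_filters(p, thresholds)]
```

Keeping the cutoffs in one dictionary makes it straightforward to swap in a different application profile, for example a fast-degrading carrier, without touching the filter logic.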

Phase 3: Detailed Property Prediction via Simulation

Objective: Obtain quantitative property estimates for filtered candidates using physics-based simulations.

- Coarse-Grained (CG) MD for Hydrophilicity:

- Protocol: For each candidate, build a CG model (e.g., using the MARTINI force field). Solvate the polymer chain in water.

- Simulation: Run a 100 ns NPT simulation at 310 K.

- Analysis: Calculate the polymer's radial distribution function (RDF) with water. The number of water molecules within the first hydration shell (5 Å) per monomer unit serves as a hydrophilicity index.

- All-Atom MD for Protein-Polymer Interaction:

- Protocol: Select top 50 candidates. Create an all-atom model of a short polymer chain (10-20 repeating units) and a target protein (e.g., serum albumin).

- Simulation: Run a 50 ns explicit-solvent MD simulation of the protein-polymer system.

- Analysis: Compute the binding free energy using the Molecular Mechanics/Poisson-Boltzmann Surface Area (MM/PBSA) method. A less negative ΔG indicates lower non-specific protein binding.

Phase 4: Ranking and Final Selection

Objective: Integrate predictions to rank candidates for a specific application.

- Normalize Data: Min-max normalize all predicted quantitative values (e.g., hydrophilicity index, ΔG, predicted glass transition temperature Tg) to a [0, 1] scale.

- Apply Weighted Scoring: Assign application-specific weights (e.g., for a drug delivery vehicle: hydrophilicity weight = 0.4, low protein binding weight = 0.4, Tg weight = 0.2). Compute a final score.

- Output: Generate a ranked list of top 10-20 polymer candidates for experimental validation.
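
A minimal sketch of the normalization and weighted scoring in Phase 4, using the example weights for a drug delivery vehicle. Several details are assumptions: the candidate IDs and Tg values are hypothetical, a higher Tg is treated as preferable (the protocol does not state a direction), and a constant column is mapped to 0.5.

```python
def min_max(values, higher_is_better=True):
    """Scale values to [0, 1]; invert when lower raw values are preferred."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]  # assumed neutral score for a constant column
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]

def rank_candidates(ids, hydrophilicity, delta_g, tg, weights=(0.4, 0.4, 0.2)):
    """Return (id, score) pairs sorted from best to worst."""
    h = min_max(hydrophilicity)  # more hydration water per monomer is better
    g = min_max(delta_g)         # less negative dG means lower protein binding
    t = min_max(tg)              # assumption: higher Tg preferred
    w_h, w_g, w_t = weights
    scores = [w_h * a + w_g * b + w_t * c for a, b, c in zip(h, g, t)]
    return sorted(zip(ids, scores), key=lambda pair: pair[1], reverse=True)

# Illustrative inputs (Tg values in degrees C are hypothetical)
ranked = rank_candidates(
    ids=["cand_1", "cand_2", "cand_3"],
    hydrophilicity=[4.8, 1.2, 3.5],
    delta_g=[-5.2, -8.7, -6.1],
    tg=[40.0, 60.0, 50.0],
)
```

Because min-max scaling depends on the candidates present in the list, scores from different screening runs are not directly comparable unless the same reference bounds are used.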

Table 1: Example Virtual Library Subset

| Polymer ID | SMILES (Repeating Unit) | Class | Repeat-Unit Molar Mass (g/mol) |

|---|---|---|---|

| PEG | CCO | Polyether | 44.05 |

| PLA | C(=O)C(C)O | Polyester | 72.06 |

| PGA | C(=O)CO | Polyester | 58.04 |

| PMMA | CC(C)(C(=O)OC) | Polymethacrylate | 100.12 |

Table 2: Summary of Predicted Properties for Top Candidates (Illustrative Data)

| Polymer ID | Cytotoxicity (Prob. Non-toxic) | Degradation (Prob. Slow) | Hydrophilicity Index | ΔG Binding (kcal/mol) | Final Score |

|---|---|---|---|---|---|

| PEG-12k | 0.97 | 0.95 | 4.8 | -5.2 | 0.92 |

| PLA-8k | 0.89 | 0.82 | 1.2 | -8.7 | 0.71 |

| P-123 | 0.93 | 0.91 | 3.5 | -6.1 | 0.85 |

Workflow & Pathway Visualizations

Title: HTVS Workflow for Biomedical Polymers

Title: AI & Simulation Integration in Screening

This document provides detailed Application Notes and Protocols for the deployment of artificial intelligence (AI) models to predict critical properties of polymers and polymer-drug conjugates. These notes are framed within a broader thesis on Artificial Intelligence in Polymer Science Applications Research, focusing on accelerating the design of advanced polymeric materials for drug delivery, biomedicine, and sustainable materials. Accurate prediction of degradation profiles, solubility parameters, toxicity endpoints, and biodistribution patterns is paramount for reducing experimental iterations and development costs.

AI Model Architectures & Performance Data

Current AI models leverage various architectures trained on curated chemical datasets. Quantitative performance metrics for key models are summarized below.

Table 1: Performance of AI Models for Critical Property Prediction

| Critical Property | Representative Model Architecture | Typical Dataset Size | Key Metric | Reported Performance (Range) | Primary Use Case |

|---|---|---|---|---|---|

| Aqueous Solubility (LogS) | Graph Neural Network (GNN) | 10,000+ compounds | Root Mean Square Error (RMSE) | 0.5 - 0.9 LogS units | Screening polymer excipients & API-polymer compatibility |

| Polymer Degradation Rate | Recurrent Neural Network (RNN) on SMILES sequences | 5,000+ degradation profiles | Mean Absolute Error (MAE) | 10-15% of total degradation time | Designing biodegradable implants & controlled release systems |

| Toxicity (e.g., hERG inhibition) | Multitask Deep Neural Network (DNN) | 100,000+ compounds | Area Under ROC Curve (AUC-ROC) | 0.85 - 0.92 | Early-stage safety screening of polymer degradation products |

| Biodistribution (Tissue-Plasma Ratio) | Gradient Boosting (XGBoost) with molecular descriptors | 2,000+ in vivo data points | Coefficient of Determination (R²) | 0.65 - 0.75 | Predicting organ-specific accumulation of nanocarriers |

Experimental Protocols

Protocol 3.1: In Silico Prediction of Polymer-Drug Solubility & Compatibility

Objective: To predict the solubility enhancement of a candidate Active Pharmaceutical Ingredient (API) by a polymeric excipient using a pre-trained AI model.

Materials:

- Chemical structures (SMILES strings) of the API and polymer.

- Access to a cloud-based or local AI prediction platform (e.g., NVIDIA Clara, customized Python environment).

- Pre-trained solubility model (e.g., GNN trained on PubChem and FDA datasets).

Procedure:

- Input Preparation:

- Generate canonical SMILES for the API and the polymer repeat unit.

- For the polymer, calculate and append key molecular descriptors (e.g., topological polar surface area, LogP of monomer) using RDKit or Mordred.

- Model Inference:

- Load the pre-trained GNN model weights.

- Encode the SMILES and descriptor data into the model's required graph or tensor format.

- Execute the model to predict aqueous solubility (LogS) for the API alone and in a virtual mixture with the polymer. The model may output a compatibility score or a predicted change in LogS.

- Output Analysis:

- A positive ΔLogS (e.g., >0.5) indicates the polymer is predicted to enhance API solubility.

- Rank multiple polymer candidates based on the predicted ΔLogS.

Protocol 3.2: Predictive Assessment of Polymer Degradation Products Toxicity

Objective: To screen potential toxicological risks of degradation products from a novel biodegradable polymer.

Materials:

- List of expected hydrolytic/enzymatic degradation products (small molecule structures).

- Multi-endpoint toxicity prediction software suite (e.g., ADMET Predictor, or an ensemble DNN model).

- Access to databases like PubChem for experimental validation (if available).

Procedure:

- Degradation Product Enumeration:

- Use a rule-based chemical transformation tool (e.g., RDKit’s reaction engine) to simulate the hydrolytic cleavage of ester, amide, or other labile bonds in the polymer backbone.

- Generate SMILES for all probable small molecule fragments.

- Batch Toxicity Prediction:

- Input the list of fragment SMILES into the multitask DNN model.

- Specify prediction endpoints: hERG channel inhibition, hepatotoxicity, mutagenicity (Ames test), and acute oral toxicity (LD50 class).

- Run batch prediction.

- Risk Flagging:

- Flag any degradation product predicted with high probability (>0.7) for hERG inhibition or mutagenicity.

- Compounds flagged require in vitro experimental validation before proceeding with in vivo studies.

Visualization of Workflows & Relationships

AI-Driven Solubility Prediction Workflow

Toxicity Screening for Polymer Degradation

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools & Resources for AI-Predictive Polymer Science

| Item / Reagent | Function / Purpose | Example / Provider |

|---|---|---|

| Chemical Descriptor Software | Calculates quantitative features (e.g., LogP, molecular weight, charge) from chemical structures for model input. | RDKit (Open Source), Mordred, Dragon |

| Pre-trained AI Models | Off-the-shelf models for property prediction, fine-tunable on proprietary data. | NVIDIA BioNeMo, Chemprop, DeepChem |

| Polymer Degradation Simulator | In silico tool to predict cleavage products based on polymer chemistry and environmental conditions. | PolymerExpert's PROMETHEUS, custom RDKit scripts |

| Toxicity Database | Curated experimental data for model training and validation of predictions. | PubChem BioAssay, ChEMBL, FDA's EDGE |

| High-Performance Computing (HPC) / Cloud GPU | Provides computational power for training complex models (e.g., GNNs) and running large-scale virtual screens. | AWS EC2 (P3 instances), Google Cloud GPUs, local GPU cluster |

Application Notes

The integration of artificial intelligence (AI) into polymer science represents a paradigm shift in biomaterials discovery, particularly for advanced therapeutic applications. Within this thesis context, AI-driven approaches—encompassing machine learning (ML), generative models, and molecular dynamics simulations—are accelerating the design of functional polymers with tailored properties, moving beyond traditional trial-and-error methodologies. This case study examines three critical applications: polymeric nanoparticles for mRNA delivery, stimulus-responsive polymers for cancer theranostics, and biodegradable copolymers for long-acting implantable devices.

1. AI-Designed Polymers for mRNA Delivery: The clinical success of lipid nanoparticles (LNPs) for mRNA vaccines has highlighted a need for next-generation delivery vectors with improved tissue specificity, reduced immunogenicity, and enhanced stability. AI models are trained on datasets of polymer chemical structures, physicochemical properties (e.g., pKa, molecular weight, logP), and experimental outcomes (e.g., transfection efficiency, cytotoxicity) to predict novel cationic or ionizable polymers. Recent studies have employed message-passing neural networks (MPNNs) to screen virtual libraries, identifying lead polymers that facilitate endosomal escape and promote mRNA translation in vivo.

2. AI-Designed Polymers for Cancer Theranostics: Theranostic polymers combine diagnostic imaging and therapeutic response within a single agent. AI facilitates the design of smart polymers responsive to tumor microenvironment (TME) cues such as pH, redox potential, or specific enzymes. Generative adversarial networks (GANs) propose novel polymer backbones and side-chain combinations that self-assemble into nanoparticles, encapsulating both chemotherapeutic drugs and contrast agents (e.g., near-infrared dyes, MRI contrast agents). These systems enable real-time treatment monitoring and adaptive therapy.

3. AI-Designed Polymers for Long-Acting Implants: For long-acting implants (e.g., contraceptive rods, HIV pre-exposure prophylaxis devices), precise control over drug release kinetics over months to years is paramount. AI models, particularly recurrent neural networks (RNNs) trained on polymer degradation and drug release profiles, predict the behavior of polyesters (e.g., PLGA, polycaprolactone) and polyurethane copolymers. Optimization targets include sustained zero-order release, mechanical integrity, and benign degradation products.

Table 1: Performance Metrics of AI-Designed Polymers for mRNA Delivery

| Polymer ID (AI-Generated) | Transfection Efficiency (% GFP+ Cells) in vitro | Cytotoxicity (Cell Viability %) | In vivo mRNA Expression (RLU/mg protein) | pKa (Predicted vs. Measured) |

|---|---|---|---|---|

| P-AI-101 | 85.2 ± 4.1 | 92.5 ± 3.8 | 1.2 x 10^8 ± 2.1 x 10^7 | 6.3 (Pred: 6.5) |

| P-AI-102 | 78.6 ± 5.3 | 95.1 ± 2.9 | 8.7 x 10^7 ± 1.8 x 10^7 | 6.8 (Pred: 6.7) |

| Benchmark (PEI) | 91.0 ± 3.2 | 65.4 ± 6.1 | 5.4 x 10^7 ± 9.5 x 10^6 | 8.5 |

Table 2: Characteristics of Theranostic Polymer Nanoparticles

| Nanoparticle Formulation | Hydrodynamic Size (nm) | Drug Loading (%) (Doxorubicin) | Fluorescence Quantum Yield | pH-Triggered Release (% at pH 5.0, 24h) | Tumor Growth Inhibition (%) in Murine Model |

|---|---|---|---|---|---|

| T-AI-201 | 112 ± 5 | 12.3 ± 0.9 | 0.45 | 78.2 ± 4.5 | 88.5 |

| T-AI-202 | 89 ± 3 | 15.8 ± 1.2 | 0.38 | 92.1 ± 3.1 | 94.2 |

| Passive Control | 105 ± 7 | 9.5 ± 1.5 | 0.05 | 25.4 ± 6.2 | 52.3 |

Table 3: Long-Acting Implant Copolymer Properties

| Copolymer Code | Degradation Time (Months, in vitro) | Initial Burst Release (%) | Daily Release Rate (µg/day, Days 30-180) | Tensile Modulus (MPa) | AI Model Used for Design |

|---|---|---|---|---|---|

| I-AI-301 | 9 | 8.2 ± 1.1 | 2.05 ± 0.23 | 1200 ± 150 | RNN + Molecular Dynamics |

| I-AI-302 | 18 | 5.5 ± 0.8 | 1.21 ± 0.15 | 850 ± 95 | Bayesian Optimization |

| PLGA 50:50 | 6 | 18.5 ± 3.2 | Variable | 2000 ± 200 | N/A |

Experimental Protocols

Protocol 1: Synthesis and Validation of AI-Designed Ionizable Polymers for mRNA Complexation

Objective: To synthesize a lead AI-predicted ionizable polymer and formulate mRNA polyplexes.

Materials: See "The Scientist's Toolkit" below.

Procedure:

- Polymer Synthesis: In a flame-dried Schlenk flask under argon, combine monomer A (2.0 mmol, predicted to confer ionizability) and monomer B (8.0 mmol, predicted to confer biodegradability) in anhydrous DMF (10 mL). Add catalyst C (0.1 mol%). Stir at 80°C for 48 hours.

- Purification: Precipitate the reaction mixture into cold diethyl ether (200 mL). Centrifuge (10,000 x g, 10 min) and collect the pellet. Dissolve in a minimal amount of DMSO and dialyze (MWCO 3.5 kDa) against deionized water for 48 hours. Lyophilize to obtain a white solid.

- Polyplex Formation: Prepare a polymer solution in sodium acetate buffer (25 mM, pH 5.0) at 1 mg/mL. Prepare mRNA (e.g., EGFP) solution in nuclease-free water at 0.1 mg/mL. Rapidly mix equal volumes of polymer and mRNA solutions to achieve desired N/P (Nitrogen/Phosphate) ratio (e.g., 10). Vortex for 30 seconds and incubate at room temperature for 30 minutes.

- Characterization:

- Size and Zeta Potential: Dilute polyplexes 1:10 in RNase-free water or 10 mM NaCl. Measure hydrodynamic diameter and polydispersity index (PDI) via dynamic light scattering (DLS). Measure zeta potential.

- Gel Retardation Assay: Load polyplexes onto a 1% agarose gel containing GelRed. Run at 100 V for 30 min in TAE buffer. Visualize mRNA retention under UV light.
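
The N/P ratio used in the polyplex formation step is the molar ratio of protonatable polymer nitrogens to mRNA phosphates. The sketch below shows the arithmetic under stated assumptions: an average of about 330 g/mol of mRNA per phosphate (a common approximation) and a polymer-specific mass per protonatable nitrogen, which must be determined for each polymer. The function name and the example value of 330 g/mol per nitrogen are hypothetical.

```python
def polymer_mass_for_np(mrna_mass_ug, np_ratio, mass_per_nitrogen,
                        mass_per_phosphate=330.0):
    """Polymer mass (ug) required to reach a target N/P ratio.

    mass_per_nitrogen: polymer mass per protonatable nitrogen, g/mol
        (polymer specific; hypothetical in the example below).
    mass_per_phosphate: average mRNA mass per phosphate, g/mol
        (approximation; assumed default).
    """
    phosphate_umol = mrna_mass_ug / mass_per_phosphate  # ug / (g/mol) = umol
    nitrogen_umol = np_ratio * phosphate_umol
    return nitrogen_umol * mass_per_nitrogen

# Example: 10 ug mRNA at N/P = 10 with a polymer carrying one
# protonatable nitrogen per 330 g/mol (hypothetical value)
required_ug = polymer_mass_for_np(10.0, np_ratio=10, mass_per_nitrogen=330.0)
```

With these example numbers the polymer-to-mRNA mass ratio is 10:1, which is what mixing equal volumes of 1 mg/mL polymer and 0.1 mg/mL mRNA provides. A polymer with a different nitrogen content would need a different stock concentration to reach the same N/P.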

Protocol 2: Evaluation of pH-Responsive Drug Release from Theranostic Nanoparticles

Objective: To quantify the drug release profile of AI-designed theranostic nanoparticles under physiological (pH 7.4) and tumoral (pH 5.0) conditions.

Materials: Dialysis bags (MWCO 14 kDa), phosphate-buffered saline (PBS), acetate buffer, fluorimeter or HPLC.

Procedure:

- Nanoparticle Preparation: Load the AI-designed polymer with doxorubicin (Dox) via nanoprecipitation or dialysis method. Purify via centrifugation/filtration.

- Release Study: Place 1 mL of nanoparticle suspension (containing ~1 mg Dox) into a dialysis bag. Immerse the bag in 50 mL of release medium (PBS pH 7.4 or acetate buffer pH 5.0) containing 0.1% w/v Tween 80 (sink condition). Agitate at 37°C, 100 rpm.

- Sampling: At predetermined time points (0.5, 1, 2, 4, 8, 24, 48, 72 h), withdraw 1 mL of the external release medium and replace with an equal volume of fresh, pre-warmed medium.

- Quantification: Measure Dox concentration in samples using fluorescence (Ex/Em: 480/590 nm) calibrated against standard curves. Calculate cumulative release percentage.
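
Because each sample removes drug from the release medium and is replaced with fresh buffer, cumulative release should account for the drug withdrawn at earlier time points. The following sketch shows one common way to apply that correction. It assumes the 50 mL external medium is the only sampled compartment and ignores the 1 mL inside the dialysis bag; the function name and concentrations are illustrative.

```python
def cumulative_release_percent(conc_ug_per_ml, dose_ug,
                               medium_ml=50.0, sample_ml=1.0):
    """Cumulative % released at each time point, corrected for sampling.

    conc_ug_per_ml: measured drug concentration in the external medium
        at successive time points.
    dose_ug: total drug initially loaded in the dialysis bag.
    """
    released = []
    removed_ug = 0.0  # drug taken out in all earlier samples
    for c in conc_ug_per_ml:
        total_ug = c * medium_ml + removed_ug
        released.append(100.0 * total_ug / dose_ug)
        removed_ug += c * sample_ml
    return released

# Illustrative concentrations (ug/mL) for a ~1 mg doxorubicin dose
profile = cumulative_release_percent([2.0, 4.0, 6.0], dose_ug=1000.0)
```

The correction is small when the sample volume is a small fraction of the medium, as here, but it grows with the number of time points.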

Protocol 3: In Vitro Degradation and Release Kinetics for Long-Acting Implant Polymers

Objective: To monitor mass loss and drug release from an AI-designed copolymer film over an extended period.

Materials: Compression molder, PBS (pH 7.4), orbital shaker incubator, lyophilizer.

Procedure:

- Film Fabrication: Compress 100 mg of the AI-designed copolymer (e.g., I-AI-301) mixed with 5 mg of model drug (e.g., levonorgestrel) into a thin film (1 cm diameter) using a heated compression molder (above polymer Tg).

- Degradation Study: Weigh each film (W0) and place it in a vial with 10 mL of sterile PBS (pH 7.4). Incubate at 37°C under gentle agitation (50 rpm). Remove films in triplicate at predetermined time points (1, 2, 3, 6, and 9 months).

- Analysis: Rinse removed films with water, lyophilize to constant weight, and record dry mass (Wd). Calculate mass loss: % Mass Loss = [(W0 - Wd)/W0] x 100. Analyze buffer for drug content via HPLC and for degradation products (e.g., lactic/glycolic acid) via GC-MS.
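
As a worked example of the mass-loss formula, the sketch below computes the percentage for each film in a triplicate and reports the mean and sample standard deviation. The function names and the weights are illustrative assumptions.

```python
from statistics import mean, stdev

def percent_mass_loss(w0_mg, wd_mg):
    """% Mass Loss = (W0 - Wd) / W0 * 100."""
    return (w0_mg - wd_mg) / w0_mg * 100.0

def summarize_timepoint(weight_pairs):
    """Mean and sample SD of mass loss for replicate (W0, Wd) pairs."""
    losses = [percent_mass_loss(w0, wd) for w0, wd in weight_pairs]
    return mean(losses), stdev(losses)

# Illustrative triplicate at one time point (weights in mg)
avg_loss, sd_loss = summarize_timepoint([(100.0, 90.0), (100.0, 88.0), (100.0, 92.0)])
```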

Diagrams

AI-Driven Polymer Discovery Workflow

Mechanism of Stimulus-Responsive Theranostic Action

The Scientist's Toolkit

Table 4: Essential Research Reagent Solutions for AI-Designed Polymer Experiments

| Item | Function/Application |

|---|---|

| AI/ML Software (e.g., TensorFlow, PyTorch, RDKit) | Provides the computational framework for building, training, and deploying models for polymer property prediction and de novo design. |

| Polymer Property Database (e.g., PoLyInfo, PubChem) | Curated experimental datasets of polymer structures and properties (Tg, degradation rate) used to train and validate AI models. |

| Ionizable Monomer (e.g., 2-(Diisopropylamino)ethyl methacrylate) | Key building block for polymers designed to complex nucleic acids (mRNA, siRNA) and facilitate endosomal escape via the "proton sponge" effect. |

| Biodegradable Crosslinker (e.g., N,N'-Bis(acryloyl)cystamine) | Introduces redox-sensitive disulfide bonds into polymer networks, enabling triggered degradation in the high glutathione (GSH) tumor microenvironment. |

| Model Therapeutic Payloads (e.g., EGFP mRNA, Doxorubicin HCl, Levonorgestrel) | Standard active agents used to evaluate the delivery efficiency, release kinetics, and therapeutic efficacy of the designed polymer systems. |

| Dynamic Light Scattering (DLS) & Zeta Potential Analyzer | Critical instrument for characterizing the hydrodynamic size, polydispersity, and surface charge of polymeric nanoparticles and polyplexes. |

| Dialysis Membranes (Varied MWCO: 3.5kDa - 14kDa) | Used for polymer purification (removing small molecule catalysts) and for conducting controlled release studies in vitro. |

| Fluorescence Plate Reader | Enables high-throughput quantification of transfection efficiency (via reporter proteins), drug release (intrinsic fluorescence), and cytotoxicity assays. |

Navigating the Challenges: Optimizing AI Models and Bridging the Digital-Experimental Gap

The integration of artificial intelligence (AI) into polymer science promises accelerated discovery and optimization of materials for drug delivery, biomaterials, and functional polymers. However, the core thesis—that AI can revolutionize the field—is fundamentally constrained by the pervasive data dilemma: experimental polymer datasets are often limited in scope, plagued by measurement noise, and inconsistent across laboratories. This document outlines practical strategies and protocols to generate robust, AI-ready data.

Strategies for Robust Data Generation

2.1. High-Throughput Experimentation (HTE) for Data Augmentation

HTE platforms enable the parallel synthesis and characterization of polymer libraries, effectively expanding dataset size from tens to hundreds of data points per experimental campaign.

Protocol 1: High-Throughput Synthesis of Acrylate Copolymer Libraries via Automated Dispensing

Objective: To generate a diverse set of copolymers for property screening.

Materials: Monomer stock solutions (methyl acrylate, butyl acrylate, 2-hydroxyethyl acrylate), initiator stock solution (AIBN in toluene), anhydrous toluene, 48-well glass-coated reactor block, automated liquid handling robot, inert atmosphere (N2 or Ar) glovebox.

Procedure:

- Place the 48-well reactor block inside the glovebox.

- Program the liquid handler to dispense varying volumes of monomer stocks into each well according to a predefined design of experiments (DoE) table to vary composition.

- Add a constant volume of initiator stock and toluene to each well to maintain constant total solids content and initiator-to-monomer ratio.

- Seal the block, transfer it to a pre-heated agitation heating station at 70°C, and react for 16 hours.

- Quench reactions by cooling to 0°C.

Data Output: A matrix of copolymer compositions (e.g., Feed Ratio A:B, Actual Composition by NMR).
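
One way to build the DoE table for the three-monomer library is a simplex lattice, in which feed fractions vary in fixed steps and always sum to one. The sketch below is an assumption about how such a table could be generated, not the design used in the protocol: the step size (1/8, which yields 45 compositions and leaves 3 of the 48 wells free for replicates or controls), the 600 µL total monomer volume, and the equimolar stock solutions are hypothetical choices.

```python
from itertools import product

def simplex_lattice(n_components=3, divisions=8):
    """All mole-fraction tuples with step 1/divisions that sum to 1."""
    points = []
    for combo in product(range(divisions + 1), repeat=n_components):
        if sum(combo) == divisions:
            points.append(tuple(c / divisions for c in combo))
    return points

def dispense_table(fractions, total_monomer_ul=600.0):
    """Convert mole fractions to stock volumes (uL per well).

    Assumes all monomer stocks have the same molar concentration, so
    volume fraction equals mole fraction (hypothetical simplification).
    """
    return [tuple(round(f * total_monomer_ul, 1) for f in row)
            for row in fractions]

design = simplex_lattice()         # (MA, BA, HEA) feed fractions per well
volumes = dispense_table(design)   # matching dispense volumes in uL
```

Each row of `volumes` can be passed to the liquid handler as one well. Stocks of unequal concentration would need a per-monomer conversion factor.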

Protocol 2: Parallel Characterization of Glass Transition Temperature (Tg)

Objective: To measure a key thermal property with minimized inter-run variance.

Materials: High-throughput DSC autosampler, sealed Tzero pans, quench-cooling accessory.

Procedure:

- Pre-dry polymer samples from Protocol 1 under vacuum.

- Using an automated balance and sampler, load 3-5 mg of each sample into a DSC pan and hermetically seal.

- Load all pans into the autosampler carousel.

- Run a standardized temperature program: equilibrate at -30°C; heat at 20°C/min to 150°C (first heat, which erases thermal history and is not analyzed); cool at 50°C/min to -30°C; heat again at 20°C/min to 150°C (second heat, used for analysis). For samples whose Tg is expected near or below -30°C (such as the lowest Tg values in Table 1), lower the start temperature so the full transition is captured.

- Software automatically extracts the midpoint Tg from the second heating curve.

Data Output: A vector of Tg values corresponding to each copolymer sample.
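As a rough illustration of what midpoint extraction involves, the sketch below finds the temperature at which the second-heat signal crosses the half-height between the glassy and rubbery baselines. It is a simplified stand-in for instrument software: it assumes flat baselines (commercial packages typically extrapolate tangents), and the function name `midpoint_tg` and the synthetic curve are hypothetical.

```python
import math


def midpoint_tg(temps, heat_flow, n_baseline=20):
    """Half-height Tg estimate, assuming flat baselines before and after the step."""
    glassy = sum(heat_flow[:n_baseline]) / n_baseline
    rubbery = sum(heat_flow[-n_baseline:]) / n_baseline
    half = 0.5 * (glassy + rubbery)
    for i in range(1, len(temps)):
        y0 = heat_flow[i - 1] - half
        y1 = heat_flow[i] - half
        if y0 == 0:
            return temps[i - 1]
        if y0 * y1 < 0:
            # Linear interpolation between the two points that bracket the crossing
            frac = y0 / (y0 - y1)
            return temps[i - 1] + frac * (temps[i] - temps[i - 1])
    raise ValueError("no glass transition step found")


# Synthetic second-heat curve: a sigmoidal step centred at a hypothetical Tg of 10.5 C
true_tg = 10.5
temps = [-30.0 + 0.5 * k for k in range(361)]  # -30 C to 150 C
heat_flow = [-0.1 - 0.2 / (1.0 + math.exp(-(t - true_tg) / 2.0)) for t in temps]

tg_estimate = midpoint_tg(temps, heat_flow)
```

On noisy experimental curves, smoothing and sloped-baseline fitting would usually be needed before the crossing is located.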

2.2. Data Denoising and Cleansing Protocols

Noise arises from instrument drift, sample prep inconsistencies, and environmental fluctuations. Systematic protocols are required to identify and mitigate it.

Protocol 3: Calibration and Signal Normalization for GPC/SEC

Objective: To reduce inter-batch molecular weight distribution noise.

Materials: Narrow dispersity polystyrene standards (range: 1kDa - 1MDa), toluene (HPLC grade), test polymer samples.

Procedure:

- Prior to each sample batch run, create a fresh calibration curve using at least 5 polystyrene standards.

- Process all samples in triplicate with randomized run order to avoid systematic drift.

- Apply a baseline correction to each chromatogram using software (e.g., subtract the signal from a blank solvent run).

- Normalize the area under the curve for each chromatogram to 1 to account for minor concentration variations.

- Apply the calibration curve to calculate Mn, Mw, and Đ.

Data Output: Cleaned, calibrated, and normalized molecular weight distributions.
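The data-processing steps of Protocol 3 (calibration fit, blank subtraction, area normalization, and moment calculation) can be sketched as follows. This is an illustrative outline under stated assumptions: a linear log10(M) versus retention time calibration, a detector signal proportional to mass concentration, and evenly spaced time points. All function names and the synthetic standards and chromatogram are hypothetical.

```python
import math


def fit_calibration(ret_times, molar_masses):
    """Least-squares line log10(M) = slope * t + intercept from the standards."""
    if len(ret_times) < 5:
        raise ValueError("use at least 5 standards")
    logs = [math.log10(m) for m in molar_masses]
    n = len(ret_times)
    mean_t = sum(ret_times) / n
    mean_l = sum(logs) / n
    sxy = sum((t - mean_t) * (l - mean_l) for t, l in zip(ret_times, logs))
    sxx = sum((t - mean_t) ** 2 for t in ret_times)
    slope = sxy / sxx
    return slope, mean_l - slope * mean_t


def clean_chromatogram(times, signal, blank):
    """Subtract the blank solvent run, then normalize the trapezoidal area to 1."""
    corrected = [s - b for s, b in zip(signal, blank)]
    area = sum(0.5 * (corrected[i] + corrected[i - 1]) * (times[i] - times[i - 1])
               for i in range(1, len(times)))
    if area <= 0:
        raise ValueError("non-positive peak area after baseline correction")
    return [c / area for c in corrected]


def molecular_weights(times, norm_signal, slope, intercept):
    """Mn, Mw and dispersity from signal heights h_i and calibrated masses M_i."""
    masses = [10 ** (slope * t + intercept) for t in times]
    total = sum(norm_signal)
    mn = total / sum(h / m for h, m in zip(norm_signal, masses))
    mw = sum(h * m for h, m in zip(norm_signal, masses)) / total
    return mn, mw, mw / mn


# Synthetic standards spanning 1 kDa to 1 MDa (hypothetical retention times, minutes)
std_times = [10.0, 12.0, 14.0, 16.0, 18.0]
std_masses = [10 ** (6.0 - 0.375 * (t - 10.0)) for t in std_times]
slope, intercept = fit_calibration(std_times, std_masses)

# Synthetic sample: Gaussian peak on top of a constant blank offset
times = [10.0 + 0.05 * k for k in range(161)]
blank = [0.02] * len(times)
signal = [math.exp(-0.5 * ((t - 13.5) / 0.3) ** 2) + b for t, b in zip(times, blank)]

norm = clean_chromatogram(times, signal, blank)
mn, mw, dispersity = molecular_weights(times, norm, slope, intercept)
```

Polystyrene calibration yields polystyrene-equivalent molecular weights, so values for acrylate copolymers are relative rather than absolute unless a universal calibration or light-scattering detector is used.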

Data Presentation & AI-Ready Structuring

Table 1: Representative High-Throughput Polymer Dataset for AI Training

Data generated from a simulated HTE campaign of acrylate copolymers.

| Sample ID | Monomer A Feed (mol%) | Monomer B Feed (mol%) | Actual Comp. A (NMR mol%) | Mn (GPC, kDa) | Đ (Mw/Mn) | Tg (DSC, °C) | Critical Micelle Conc. (CMC, mg/L) |

|---|---|---|---|---|---|---|---|

| P-001 | 100 | 0 | 100 | 45.2 | 1.12 | 10.5 | N/A |

| P-023 | 70 | 30 | 68.5 | 48.7 | 1.18 | -1.2 | 15.3 |

| P-045 | 50 | 50 | 52.1 | 52.3 | 1.21 | -12.8 | 8.7 |

| P-067 | 30 | 70 | 31.4 | 46.8 | 1.15 | -24.5 | 4.1 |

| P-089 | 0 | 100 | 0 | 43.9 | 1.09 | -54.0 | 1.5 |

Table 2: Common Noise Sources & Mitigation Strategies in Polymer Data

| Data Type | Primary Noise Source | Mitigation Protocol (Reference) | Expected Noise Reduction |

|---|---|---|---|

| Molecular Weight (GPC) | Column degradation, solvent/flow rate variance | Protocol 3 (Daily calibration, triplicate runs) | CV* < 5% for Mn |

| Thermal Analysis (DSC) | Sample mass variation, pan seal integrity | Automated sampling, standardized mass (5.0 ± 0.1 mg) | CV < 2% for Tg |

| Spectroscopy (FTIR) | Background humidity, film thickness | Background subtraction with dry air, spin-coating for uniform films | Peak ratio RSD < 3% |

| Mechanical Testing | Sample geometry defects, grip slip | Use of dog-bone dies, digital image correlation (DIC) | Young's Modulus RSD < 8% |

*CV: Coefficient of Variation; RSD: Relative Standard Deviation.
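The CV and RSD thresholds in Table 2 are both computed as the sample standard deviation divided by the mean, expressed in percent. A minimal sketch, using a hypothetical triplicate of Mn values and a hypothetical helper name:

```python
import statistics


def coefficient_of_variation(values):
    """CV (equivalently RSD) in percent: sample standard deviation / |mean| * 100."""
    mean = statistics.fmean(values)
    if mean == 0:
        raise ValueError("CV is undefined for a zero mean")
    return statistics.stdev(values) / abs(mean) * 100.0


# Hypothetical triplicate GPC measurements of Mn in kDa
mn_triplicate_kda = [48.1, 48.7, 49.3]
cv = coefficient_of_variation(mn_triplicate_kda)
passes_mn_target = cv < 5.0  # Table 2 target for Mn
```

Because CV depends on the zero point of the scale, a Tg target such as CV < 2% is more meaningful when Tg is expressed in kelvin; for values near 0°C the Celsius-based CV becomes arbitrarily large.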

Visualization of Methodologies

Diagram Title: Workflow for Generating AI-Ready Polymer Data

Diagram Title: Three-Pronged Strategy to Overcome the Data Dilemma

The Scientist's Toolkit: Research Reagent Solutions

| Item / Reagent | Function in Overcoming Data Dilemma |

|---|---|

| Automated Liquid Handling Robot | Enables precise, reproducible dispensing for HTE synthesis (Protocol 1), minimizing human error and increasing dataset scale. |

| High-Throughput DSC Autosampler | Allows rapid, consistent thermal analysis of large polymer libraries under identical conditions, reducing measurement noise. |

| Narrow Dispersity Polystyrene Standards | Essential for daily calibration of GPC/SEC systems (Protocol 3), ensuring accuracy and consistency of molecular weight data. |

| Sealed Tzero DSC Pans | Ensure sample integrity during heating cycles, preventing weight loss/oxidation that introduces noise in thermal data. |

| 48/96-Well Reactor Blocks | Provide a standardized format for parallel polymer synthesis, enabling direct correlation between synthesis conditions and properties. |

| Design of Experiments (DoE) Software | Guides efficient exploration of compositional and parametric space with minimal experiments, maximizing information gain from limited data. |

| Data Curation & Management Platform | Centralizes raw and processed data (like Table 1) with metadata, ensuring reproducibility and facilitating data sharing for collaborative AI. |

The deployment of artificial intelligence (AI) for the design and analysis of complex polymer systems, ranging from drug delivery vehicles to high-performance materials, presents a significant trust challenge. These models, often deep neural networks, function as "black boxes," offering high predictive accuracy but little insight into the underlying structure-property relationships. In the context of AI applications in polymer science, this document provides actionable protocols for moving beyond these black boxes. The goal is to give researchers, scientists, and drug development professionals methods to interpret model decisions, validate predictions against physical understanding, and thereby build trust for critical applications.

Application Note 1: Rationalizing Polymer Formulation for Controlled Release. AI models can predict drug release profiles from poly(lactic-co-glycolic acid) (PLGA) nanoparticle formulations based on input parameters like polymer molecular weight, lactide:glycolide (L:G) ratio, and drug loading. Interpretability techniques, such as SHAP (SHapley Additive exPlanations), are applied post-hoc to quantify the contribution of each feature to a specific prediction, allowing scientists to understand whether the model's decision aligns with known polymer degradation kinetics.
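To make the idea of per-feature contributions concrete, the sketch below computes exact Shapley values for a toy release-half-time model by averaging each feature's marginal contribution over all feature orderings. It is an illustration only: the model `toy_half_time`, its coefficients, and the two formulations are hypothetical, and a single reference formulation is used as the baseline, whereas the SHAP library averages over a background dataset and uses faster algorithms.

```python
from itertools import permutations


def toy_half_time(x):
    """Hypothetical release half-time (days); coefficients are arbitrary."""
    return (10.0
            + 20.0 * x["lactide_frac"]
            + 0.1 * x["mw_kda"]
            - 0.5 * x["drug_loading_pct"]
            + 0.2 * x["lactide_frac"] * x["mw_kda"])  # interaction term


def shapley_values(model, instance, baseline):
    """Exact Shapley values relative to a single baseline input."""
    names = list(instance)
    phi = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = model(current)
        for name in order:
            # Switch one feature from its baseline value to the instance value
            current[name] = instance[name]
            updated = model(current)
            phi[name] += updated - previous
            previous = updated
    return {name: total / len(orderings) for name, total in phi.items()}


baseline = {"lactide_frac": 0.50, "mw_kda": 30.0, "drug_loading_pct": 5.0}
instance = {"lactide_frac": 0.75, "mw_kda": 50.0, "drug_loading_pct": 10.0}

phi = shapley_values(toy_half_time, instance, baseline)
prediction_shift = toy_half_time(instance) - toy_half_time(baseline)
```

The contributions sum to the difference between the prediction and the baseline prediction, which is the additivity property that SHAP force and waterfall plots visualize. Exhaustive enumeration scales factorially with the number of features, so it is only practical for toy cases like this one.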

Application Note 2: De novo Design of Monomers for Target Properties. Generative AI models propose novel monomer structures for desired properties (e.g., high glass transition temperature, Tg). Integrated gradient analysis traces the proposed structure back through the model to highlight which chemical substructures (e.g., aromatic rings, hydrogen-bonding groups) the model "attended to," providing a chemically intuitive rationale for the design.

Table 1: Impact of Interpretability Methods on Model Trust and Performance in Polymer Science Applications

| Interpretability Method | Model Type Applied To | Key Quantitative Output | Typical Outcome in Polymer Studies | Trust Metric Improvement* |

|---|---|---|---|---|

| SHAP (SHapley Additive exPlanations) | Gradient Boosting, Neural Networks | Feature importance values (mean absolute SHAP) | Ranks L:G ratio as top feature for PLGA degradation rate. | +40% |

| Integrated Gradients | Deep Neural Networks (CNNs, GNNs) | Attribution scores per input feature (e.g., atom, monomer unit) | Identifies specific functional groups contributing 70% to predicted Tg. | +35% |

| LIME (Local Interpretable Model-agnostic Explanations) | Any "black box" model | Local linear model coefficients | Explains a single prediction of solubility parameter (δ) via 3 key molecular descriptors. | +25% |

| Attention Mechanisms (Intrinsic) | Transformer-based Models | Attention weights between polymer sequence units | Visualizes correlations between distant blocks in a copolymer affecting self-assembly. | +50% |

| Partial Dependence Plots (PDP) | All supervised models | Marginal effect of a feature on prediction | Shows non-linear relationship between initiator concentration and polymer dispersity (Đ). | +30% |

*Trust Metric: representative percentage increase in user-reported confidence in model predictions after an explanation is provided. Values are synthetic and shown for illustration only.

Table 2: Validation Metrics for Interpretable AI Models in Polymer Property Prediction

| Target Property | Model Architecture | Standard R² (Test) | R² on Physically-Informed Subset* | Critical Interpretability Check | Outcome |

|---|---|---|---|---|---|

| Glass Transition Temp. (Tg) | Graph Neural Network (GNN) | 0.88 | 0.92 | Attribution aligns with Fox equation precedents? | Yes, highlights backbone rigidity. |

| Drug Release Half-time (t1/2) | Random Forest | 0.79 | 0.85 | Top SHAP features match in vitro degradation drivers? | Yes, L:G ratio & Mw dominate. |

| Tensile Strength | Convolutional Neural Network (on SMILES) | 0.82 | 0.80 | Explanations identify known reinforcing motifs? | Yes, detects aromatic stacking. |

| Crystallinity % | Ensemble Model | 0.75 | 0.78 | PDP trends match known thermal history effects? | Yes, confirms annealing temp. plateau. |

*Subset of test data where predictions have high-confidence, physically plausible explanations.

Detailed Experimental Protocols

Protocol 1: Applying SHAP Analysis to a Polymer Property Predictor

Objective: To explain the feature importance of a trained random forest model predicting the degradation rate of PLGA nanoparticles.

Materials: Trained model, test dataset (containing features: L:G ratio, Mw, drug loading %, encapsulation efficiency, particle size), SHAP Python library.

Procedure:

- Model Training: Train a random forest regressor on your historical formulation data to predict degradation rate (e.g., time for 50% mass loss).

- SHAP Explainer Initialization: Choose a TreeSHAP explainer compatible with tree-based models. Instantiate it using the trained model.

explainer = shap.TreeExplainer(trained_model)

- SHAP Value Calculation: Calculate SHAP values for the entire test set or a representative sample.

shap_values = explainer.shap_values(X_test)

- Global Interpretation: Generate a bar plot of mean absolute SHAP values to see global feature importance.

shap.summary_plot(shap_values, X_test, plot_type="bar")

- Local Interpretation: For a single, specific formulation prediction, generate a force plot or waterfall plot to see how each feature pushed the prediction from the base value.

shap.force_plot(explainer.expected_value, shap_values[0,:], X_test.iloc[0,:])

- Dependence Analysis: Plot SHAP values for the most important feature against its feature value to identify trends and interactions.

Validation: Cross-reference the top 3 features identified by SHAP with the existing polymer science literature. Design 3-5 new experimental formulations where the top SHAP feature is varied while others are held constant. The experimental trend should match the direction and relative magnitude indicated by the SHAP dependence plot.
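The ranking and trend comparison described in this protocol can be sketched without any plotting. The example below ranks features by mean absolute SHAP value, selects the top three for literature cross-referencing, and checks whether the SHAP dependence trend and a follow-up experiment point in the same direction. The SHAP matrix, feature values, and experimental measurements are all hypothetical numbers chosen for illustration, as are the helper names.

```python
def rank_features_by_mean_abs_shap(shap_rows, feature_names):
    """Global importance: mean |SHAP| per feature, sorted from high to low."""
    n = len(shap_rows)
    mean_abs = [sum(abs(row[j]) for row in shap_rows) / n
                for j in range(len(feature_names))]
    return sorted(zip(feature_names, mean_abs), key=lambda p: p[1], reverse=True)


def ls_slope(xs, ys):
    """Least-squares slope of y against x, used only for its sign here."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature values must vary")
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx


feature_names = ["L:G ratio", "Mw", "drug loading %",
                 "encapsulation efficiency", "particle size"]

# Hypothetical SHAP values: one row per test formulation, one column per feature
shap_rows = [
    [3.0, 1.5, -0.4, 0.1, 0.6],
    [-2.5, 1.0, 0.3, -0.2, -0.5],
    [4.0, -2.0, 0.5, 0.1, 0.7],
    [-3.5, -1.5, -0.2, 0.0, -0.4],
]

ranking = rank_features_by_mean_abs_shap(shap_rows, feature_names)
top3 = [name for name, _ in ranking[:3]]

# Dependence trend for the top feature (lactide fraction) in the test set
lg_test_values = [0.75, 0.50, 0.85, 0.50]
lg_shap = [row[0] for row in shap_rows]

# Hypothetical follow-up experiment varying only the lactide fraction
lg_experiment = [0.50, 0.65, 0.75, 0.85]
measured_t50_days = [20.0, 26.0, 31.0, 37.0]

trend_agrees = (ls_slope(lg_test_values, lg_shap) > 0) == \
               (ls_slope(lg_experiment, measured_t50_days) > 0)
```

A sign comparison only tests direction. Comparing relative magnitudes, as the validation step also asks, would require the SHAP values and the measurements to be on the same scale as the model output.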

Protocol 2: Integrated Gradients for Rationalizing a GNN-Based Monomer Designer

Objective: To attribute the predicted high Tg of a novel monomer, generated by a Graph Neural Network (GNN), to specific atoms and substructures.

Materials: Trained GNN model, generated monomer structure (as graph), baseline input (e.g., zero graph or a simple hydrocarbon), IntegratedGradients class from libraries like Captum or DeepChem.

Procedure:

- Model & Input Preparation: Ensure your GNN model is in evaluation mode. Represent the monomer as a graph with node features (atom type, hybridization) and edge features (bond type).

- Define Baseline: Select a meaningful baseline. A common choice is a graph with the same structure but with neutral node features (e.g., all carbon atoms).

- Compute Attributions: Use the Integrated Gradients method. The algorithm computes the integral of gradients along a straight path from the baseline to the input.

- Visualize Node Attributions: Map the calculated attribution scores back to the atoms in the original monomer structure. Use a color scale (e.g., red for positive contribution to high Tg, blue for negative).

- Aggregate to Functional Groups: Sum attribution scores for atoms belonging to identifiable chemical groups (e.g., carbonyl, aromatic ring, hydroxyl). Rank these groups by total attribution.

Validation: Synthesize or identify analogues of the generated monomer where the top-contributing functional group is modified or removed. Use molecular simulation (e.g., MD) to compute the theoretical Tg change for these analogues. The direction of change should correlate with the sign and magnitude of the attributed importance.
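The path integral at the core of Integrated Gradients can be illustrated on a toy differentiable model. The sketch below is not a GNN and does not use Captum or DeepChem: it assumes a fixed-length descriptor vector, a logistic model with hypothetical weights, an all-zero baseline, and a midpoint Riemann sum to approximate the integral. The descriptor names and the "Tg score" output are placeholders.

```python
import math

# Hypothetical descriptors: [aromatic rings, H-bond donors, flexible linkers]
WEIGHTS = [0.8, 0.5, -0.6]
BIAS = -1.0


def model(x):
    """Toy 'high-Tg score' in (0, 1): logistic function of a weighted sum."""
    z = BIAS + sum(w * v for w, v in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def gradient(x):
    """Analytic gradient of the logistic model with respect to its inputs."""
    s = model(x)
    return [s * (1.0 - s) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, steps=200):
    """Midpoint Riemann approximation of the straight-path gradient integral."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (v - b) for v, b in zip(x, baseline)]
        grads = gradient(point)
        totals = [t + g for t, g in zip(totals, grads)]
    # Scale the averaged gradient by the input-minus-baseline difference
    return [(v - b) * t / steps for v, b, t in zip(x, baseline, totals)]


monomer = [2.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]

attributions = integrated_gradients(monomer, baseline)
completeness_gap = abs(sum(attributions) - (model(monomer) - model(baseline)))
```

The attributions should sum to the difference between the prediction and the baseline prediction (the completeness property); a large `completeness_gap` indicates that more integration steps are needed. In a graph setting the same sum runs over node features, and per-atom scores are then aggregated into functional groups as described in the procedure.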

Visualizations

Diagram Title: XAI Workflow for Polymer AI Trust

Diagram Title: Trust-Centric Polymer AI Development Cycle

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Materials and Tools for Interpretable Polymer AI Research